In the rapidly evolving world of artificial intelligence (AI) and machine learning (ML), selecting the right hardware is critical to unlocking the full potential of your projects. With workloads becoming increasingly complex, the GPU you choose can dramatically impact your efficiency and results. Two powerful contenders in this space are NVIDIA’s A100-SXM4-40GB and L40 GPUs. Both are tailored for demanding tasks, yet each has distinct strengths and focuses.

In this article, we will compare these GPUs in detail. We will explore their unique capabilities, ideal use cases, and key differentiators. By doing so, we aim to help you make an informed decision.

The Role of GPUs in AI and ML

GPUs have revolutionized AI and ML by providing the computational power needed to process vast datasets and perform complex calculations in parallel. CPUs are built around a relatively small number of cores optimized for fast sequential execution, whereas GPUs run thousands of lightweight threads at once. The matrix operations at the heart of deep learning map naturally onto this design, which makes GPUs indispensable for training deep learning models and running AI-driven applications.

The choice of GPU can greatly influence the speed and efficiency of your AI and ML workflows. Key factors to consider include core architecture, memory, power consumption, and how well the GPU integrates with your existing infrastructure.

NVIDIA A100-SXM4-40GB: Powerhouse for Large-Scale AI

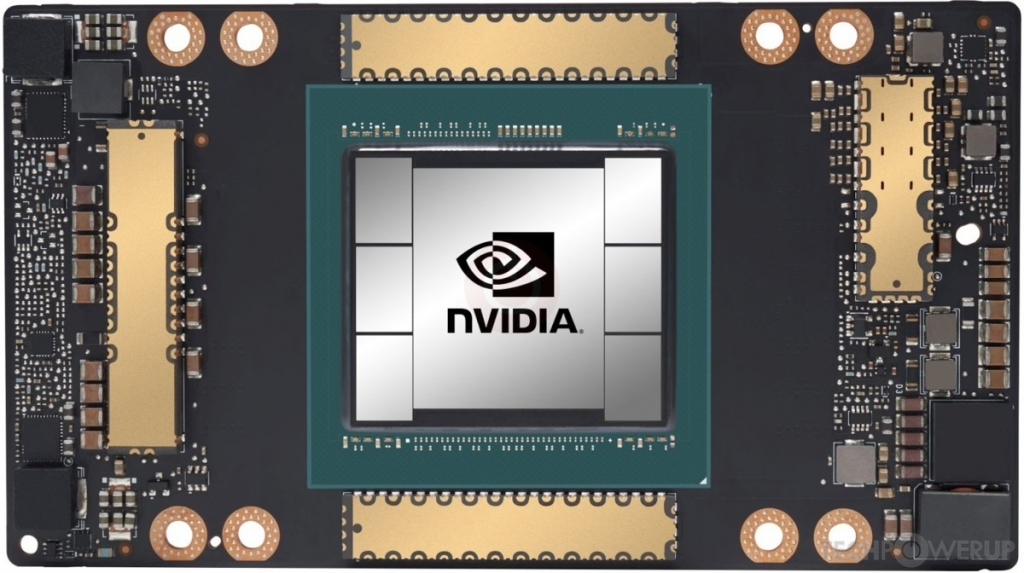

The NVIDIA A100-SXM4-40GB is NVIDIA’s flagship data center GPU of the Ampere generation, optimized for intensive AI, ML, and data analytics workloads. It offers 6,912 CUDA cores and 432 third-generation Tensor Cores, which made it one of the most powerful GPUs available at its 2020 launch.

Key Specifications:

- Architecture: Ampere

- CUDA Cores: 6,912

- Tensor Cores: 432

- Memory: 40GB HBM2

- Memory Bandwidth: 1.6TB/s

- TDP: 400W

Performance Highlights:

The A100 is engineered to speed up AI model training and inference. It handles massive datasets with ease due to its high memory bandwidth. This GPU excels in environments needing both computational power and scalability, such as large data centers and cloud platforms. Its Multi-Instance GPU (MIG) technology allows partitioning into smaller, isolated instances for flexibility.
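
To make the bandwidth point concrete, a simple roofline estimate can help. The sketch below assumes NVIDIA's published peaks for the A100 (312 TFLOPS dense FP16 Tensor throughput and roughly 1.6TB/s of memory bandwidth) and treats them as ideal limits. The function names are illustrative, and real kernels rarely reach either ceiling.

```python
def attainable_tflops(intensity_flop_per_byte, peak_tflops, bandwidth_tb_s):
    """Roofline model: throughput is capped by compute or by memory traffic."""
    # FLOP/byte multiplied by TB/s gives TFLOP/s.
    memory_bound_tflops = intensity_flop_per_byte * bandwidth_tb_s
    return min(peak_tflops, memory_bound_tflops)


def ridge_point(peak_tflops, bandwidth_tb_s):
    """Arithmetic intensity (FLOP/byte) above which a kernel is compute-bound."""
    return peak_tflops / bandwidth_tb_s


# Assumed A100-SXM4-40GB peaks (published figures, not measurements).
A100_PEAK_TFLOPS = 312.0
A100_BANDWIDTH_TB_S = 1.6

if __name__ == "__main__":
    print("ridge point:", ridge_point(A100_PEAK_TFLOPS, A100_BANDWIDTH_TB_S))
    for intensity in (1, 50, 500):
        tflops = attainable_tflops(intensity, A100_PEAK_TFLOPS, A100_BANDWIDTH_TB_S)
        print(intensity, "FLOP/byte ->", tflops, "TFLOPS")
```

Kernels whose arithmetic intensity falls below the ridge point are limited by memory bandwidth rather than by Tensor Core throughput, which is why bandwidth matters so much for data-heavy workloads.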

NVIDIA L40: Balancing AI and Graphics

The NVIDIA L40 is a newer entrant, built on the Ada Lovelace architecture. It handles both AI workloads and graphics-intensive tasks exceptionally well. This versatility makes it suitable for industries needing high-performance graphics along with AI processing.

Key Specifications:

- Architecture: Ada Lovelace

- CUDA Cores: 18,176

- Tensor Cores: 568 (fourth generation)

- Memory: 48GB GDDR6 with ECC

- Memory Bandwidth: 864GB/s

- TDP: 300W

Performance Highlights:

The L40 balances AI and graphics performance, making it well suited to cloud gaming, rendering, simulation, and autonomous vehicle development. Its 300W TDP, compared with the A100’s 400W, lowers power and cooling demands, which is beneficial in environments where power costs are a concern.

A Comparative Look: A100-SXM4-40GB vs. L40

Here’s a side-by-side comparison of the A100-SXM4-40GB and L40 GPUs:

| Feature | NVIDIA A100-SXM4-40GB | NVIDIA L40 |
|---|---|---|
| Architecture | Ampere | Ada Lovelace |
| CUDA Cores | 6,912 | 18,176 |
| Tensor Cores | 432 (third generation) | 568 (fourth generation) |
| Memory | 40GB HBM2 | 48GB GDDR6 |
| Memory Bandwidth | 1.6TB/s | 864GB/s |
| TDP | 400W | 300W |
| MIG Support | Yes | No |
| NVLink Support | Yes | No |
| FP16 Tensor Performance (dense) | 312 TFLOPS | 181 TFLOPS |
| Cooling Requirements | High | Moderate |
| Release Year | 2020 | 2022 |
| Primary Use Cases | AI/ML Training, Data Analytics | AI Inference, Graphics-Intensive Workloads |
| Cost | High | Mid-to-high |
| Scalability | Excellent | Good |
| Energy Efficiency | Moderate | High |
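
The hard constraints in the table (memory, TDP, MIG, NVLink) can be turned into a quick shortlist check. The following is a minimal sketch, not a sizing tool: `GpuSpec` and `shortlist` are hypothetical names, and the values are copied from the table above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    name: str
    memory_gb: int
    tdp_watts: int
    mig: bool
    nvlink: bool


# Values copied from the comparison table.
GPUS = [
    GpuSpec("A100-SXM4-40GB", memory_gb=40, tdp_watts=400, mig=True, nvlink=True),
    GpuSpec("L40", memory_gb=48, tdp_watts=300, mig=False, nvlink=False),
]


def shortlist(min_memory_gb=0, max_tdp_watts=None, need_mig=False, need_nvlink=False):
    """Return the names of GPUs that satisfy every hard requirement."""
    matches = []
    for gpu in GPUS:
        if gpu.memory_gb < min_memory_gb:
            continue
        if max_tdp_watts is not None and gpu.tdp_watts > max_tdp_watts:
            continue
        if need_mig and not gpu.mig:
            continue
        if need_nvlink and not gpu.nvlink:
            continue
        matches.append(gpu.name)
    return matches


if __name__ == "__main__":
    print(shortlist(need_nvlink=True))
    print(shortlist(min_memory_gb=44, max_tdp_watts=300))
```

An empty result means no single card meets all the stated requirements, which is itself useful to know before comparing softer criteria such as cost.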

Real-World Applications: Where Do They Shine?

A100-SXM4-40GB: Best for Research and Large-Scale AI

The A100 is ideal for large-scale AI research and enterprise-level deployments. It handles vast datasets and complex computations with exceptional speed. This GPU is crucial for tasks like natural language processing, autonomous driving simulations, and scientific research.
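
For training workloads, the first practical question is often whether the model states fit in 40GB at all. The sketch below uses a common rule of thumb, stated here as an assumption: about 16 bytes per parameter for mixed-precision training with Adam, and about 2 bytes per parameter for FP16 inference. It ignores activations, which can dominate at large batch sizes, and the 80% headroom default is an arbitrary illustrative choice.

```python
# Rule-of-thumb byte counts per parameter (assumptions, not measurements).
BYTES_PER_PARAM_FP16_INFERENCE = 2  # FP16 weights only
BYTES_PER_PARAM_MIXED_ADAM = 16     # FP16 weights and gradients, FP32 master weights, two Adam moments


def model_state_gb(num_params, bytes_per_param):
    """Memory for model states in GB (10**9 bytes); ignores activations and buffers."""
    return num_params * bytes_per_param / 1e9


def fits(num_params, bytes_per_param, gpu_memory_gb, headroom=0.8):
    """True if model states fit within the given fraction of GPU memory."""
    return model_state_gb(num_params, bytes_per_param) <= gpu_memory_gb * headroom


if __name__ == "__main__":
    for params in (1_000_000_000, 3_000_000_000, 13_000_000_000):
        print(
            params,
            "train on 40GB:", fits(params, BYTES_PER_PARAM_MIXED_ADAM, 40),
            "infer on 40GB:", fits(params, BYTES_PER_PARAM_FP16_INFERENCE, 40),
        )
```

When the training estimate exceeds a single card, the model has to be sharded across GPUs, and interconnect bandwidth becomes the next constraint.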

L40: Ideal for AI-Driven Graphics and Edge Deployments

The L40 balances AI and graphics performance. It excels in rendering AI-driven visualizations, making it perfect for gaming and virtual reality. Additionally, its energy efficiency makes it a strong candidate for edge computing setups.

Scalability and Integration

A100-SXM4-40GB:

The A100 offers outstanding scalability, making it suitable for multi-GPU setups in large data centers. Its NVLink support allows seamless communication between GPUs, which is essential for scaling up complex AI tasks.
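
A rough way to see what NVLink buys is to estimate how long it takes to synchronize gradients across GPUs. The sketch below uses the idealized ring all-reduce cost, in which each GPU sends 2(N-1)/N times the payload, and ignores latency and protocol overhead. The bandwidth constants are assumptions based on published peak figures (600GB/s bidirectional for A100 NVLink, about 64GB/s bidirectional for PCIe Gen4 x16), halved to get a per-direction rate.

```python
def ring_allreduce_seconds(payload_gb, num_gpus, link_gb_per_s):
    """Idealized ring all-reduce time: each GPU sends 2*(N-1)/N of the payload."""
    if num_gpus < 2:
        return 0.0
    traffic_gb = 2 * (num_gpus - 1) / num_gpus * payload_gb
    return traffic_gb / link_gb_per_s


# Assumed per-direction peak bandwidths, not measured values.
NVLINK_A100_GB_S = 300.0   # half of the 600 GB/s bidirectional NVLink figure
PCIE_GEN4_X16_GB_S = 32.0  # roughly half of 64 GB/s bidirectional

if __name__ == "__main__":
    gradients_gb = 2.0  # e.g. 1 billion FP16 gradients
    for label, bandwidth in (("NVLink", NVLINK_A100_GB_S), ("PCIe Gen4", PCIE_GEN4_X16_GB_S)):
        seconds = ring_allreduce_seconds(gradients_gb, 8, bandwidth)
        print(label, round(seconds * 1000, 2), "ms per all-reduce")
```

Under these assumptions the PCIe path is close to an order of magnitude slower per synchronization step, which matters most for training jobs that exchange gradients on every iteration.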

L40:

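The L40 also scales to multi-GPU servers, but because it lacks NVLink, its cards communicate over PCIe. It therefore suits balanced workloads that need little GPU-to-GPU traffic, and it works well in compact setups that need both AI and graphics processing. Its lower power requirements simplify integration into existing systems.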

Cost and Investment Considerations

Cost is a significant factor when choosing between these two GPUs. The A100 commands a higher price, justified by its superior performance in training and data analytics. However, if your work requires a mix of AI and graphics with an eye on energy consumption, the L40 offers a more balanced cost-to-performance ratio.

Software Ecosystem and Compatibility

Both GPUs support NVIDIA’s CUDA platform and major AI frameworks like TensorFlow, PyTorch, and MXNet. The A100 is optimized for mixed-precision AI workloads, making it ideal for heavy training tasks. The L40 excels in environments requiring high-performance graphics alongside AI capabilities.
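
Because both cards sit behind the same CUDA stack, code can detect which one it is running on and adapt. The sketch below is one way to do that with PyTorch, assuming it is installed; it falls back to `None` otherwise. The helper names are hypothetical. The capability values reflect that CUDA reports compute capability 8.0 for the A100 and 8.9 for Ada Lovelace parts such as the L40.

```python
# Compute capability reported by CUDA for each architecture.
ARCH_BY_CAPABILITY = {
    (8, 0): "Ampere (e.g. A100)",
    (8, 9): "Ada Lovelace (e.g. L40)",
}


def describe_capability(capability):
    """Map a (major, minor) compute capability to an architecture label."""
    return ARCH_BY_CAPABILITY.get(tuple(capability), "other/unknown")


def detect_gpu():
    """Return (device name, architecture label) for GPU 0, or None if unavailable."""
    try:
        import torch  # optional dependency; the sketch degrades gracefully without it
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    name = torch.cuda.get_device_name(0)
    capability = torch.cuda.get_device_capability(0)
    return name, describe_capability(capability)


if __name__ == "__main__":
    print(detect_gpu())
```

A training script could use the result to pick defaults, for example enabling multi-GPU data parallelism only where a fast interconnect is present.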

Future-Proofing Your AI Infrastructure

Either GPU can serve an AI infrastructure for years, though for different reasons. The A100’s MIG partitioning and NVLink scaling let capacity grow along with the complexity of AI workloads. The L40’s lower power draw, on the other hand, makes it a strong candidate as industries increasingly prioritize sustainable computing. Both therefore offer long-term value, catering to different but equally important needs.

Challenges to Consider

Cooling and Power Requirements:

The A100’s 400W TDP calls for robust cooling, which increases infrastructure costs. In contrast, the L40’s 300W TDP results in fewer thermal challenges, making it better suited to environments with limited cooling.
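
The power difference can be translated into a rough yearly electricity figure. The sketch below is an upper-bound estimate that assumes the card runs at its full TDP. The electricity price and the PUE (power usage effectiveness, a multiplier that accounts for cooling and facility overhead) are hypothetical inputs to replace with your own.

```python
def annual_energy_cost(tdp_watts, price_per_kwh, utilization=1.0, pue=1.0):
    """Upper-bound yearly electricity cost for one GPU running at TDP."""
    hours_per_year = 24 * 365
    kwh = tdp_watts / 1000 * hours_per_year * utilization * pue
    return kwh * price_per_kwh


if __name__ == "__main__":
    price = 0.10  # hypothetical price per kWh
    pue = 1.5     # hypothetical facility overhead
    for name, tdp in (("A100-SXM4-40GB", 400), ("L40", 300)):
        print(name, round(annual_energy_cost(tdp, price, pue=pue), 2))
```

Multiplying the per-card difference by the number of GPUs in a deployment shows how quickly power becomes a line item at data center scale.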

Integration and Compatibility:

Both GPUs require compatibility checks with your current setup. The A100 may demand more significant infrastructure upgrades, both because of its higher power requirements and because the SXM4 form factor mounts on a compatible HGX-style baseboard rather than in a standard PCIe slot. The L40, a PCIe card, is generally easier to integrate into existing servers.

Conclusion: Which GPU Should You Choose?

Choosing between the NVIDIA A100-SXM4-40GB and L40 depends on your specific AI and ML needs. Of the two, the A100 is the stronger choice for large-scale AI training and data analytics, making it ideal for research institutions and large enterprises. The L40’s versatility in AI-driven graphics and its lower power draw make it an excellent choice for gaming, simulation, and edge computing.

Whichever GPU you select, both are engineered for demanding AI and ML workloads and should continue to serve your projects well as those workloads grow.
