The NVIDIA H200 is a data-center GPU built for AI workloads where performance, efficiency, and low-latency inference matter. It targets the most demanding of these workloads, including deep learning, generative AI, and large-scale data processing.

In this article, we explore the features and benefits of the H200 GPU, examining how its high-bandwidth memory and compute power suit it to real-time applications. We also touch on its role in industries such as healthcare, finance, and autonomous systems. Whether you’re serving a single latency-sensitive model or running large-scale cloud deployments, the H200 is a key enabler of current AI performance.

Introduction to the H200 GPU

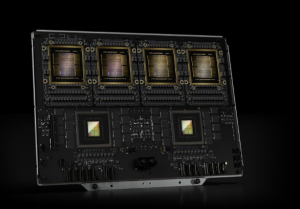

The H200 GPU is a high-performance data-center GPU developed by NVIDIA and built on the Hopper architecture. It is designed to accelerate artificial intelligence (AI) and machine learning (ML) workloads, particularly inference for large models where response time matters. According to NVIDIA's published specifications, it pairs 141 GB of HBM3e memory with roughly 4.8 TB/s of memory bandwidth.

Key Features of the H200 GPU

- High-Performance Computing: The H200 uses the Hopper architecture's Tensor Cores, including FP8 support, to accelerate demanding AI and ML workloads.

- Low-Latency Inference: Large, fast on-package memory keeps model weights and working data close to the compute units, which helps reduce per-request latency for real-time processing and decision-making.

- High-Bandwidth Memory: The H200 is equipped with 141 GB of HBM3e memory and roughly 4.8 TB/s of memory bandwidth (NVIDIA's published figures), so data reaches the compute units quickly.

- Cooling: As a data-center part, the H200 relies on the cooling design of the server it is installed in, and sustained performance and reliability depend on that design.

- Scalability: Multiple H200 GPUs can be linked with NVLink inside multi-GPU servers, and the shared Hopper platform eases integration into existing infrastructure.
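To make the link between memory bandwidth and latency concrete, here is a rough back-of-envelope sketch. It assumes that generating one token requires streaming every model weight from GPU memory once, so peak bandwidth sets a floor on per-token latency. The function names and the 70-billion-parameter example are illustrative assumptions, and the bandwidth figure is NVIDIA's published peak rather than a measured value. Real latency will be higher.

```python
# Back-of-envelope floor on per-token decode latency for a model whose
# speed is limited by memory bandwidth. Inputs are assumptions, not
# measurements: real systems add compute, KV-cache reads, and overhead.

H200_BANDWIDTH_BYTES_PER_S = 4.8e12  # ~4.8 TB/s, NVIDIA's published peak


def min_decode_latency_ms(num_params: float, bytes_per_param: float,
                          bandwidth: float = H200_BANDWIDTH_BYTES_PER_S) -> float:
    """Milliseconds needed to read every weight from memory once."""
    if bandwidth <= 0:
        raise ValueError("bandwidth must be positive")
    weight_bytes = num_params * bytes_per_param
    return weight_bytes / bandwidth * 1e3


def max_tokens_per_second(num_params: float, bytes_per_param: float,
                          bandwidth: float = H200_BANDWIDTH_BYTES_PER_S) -> float:
    """Upper bound on single-stream tokens per second under the same assumption."""
    return 1e3 / min_decode_latency_ms(num_params, bytes_per_param, bandwidth)


if __name__ == "__main__":
    # Hypothetical 70-billion-parameter model at two weight precisions.
    for label, bytes_per_param in [("FP16", 2), ("FP8", 1)]:
        latency = min_decode_latency_ms(70e9, bytes_per_param)
        rate = max_tokens_per_second(70e9, bytes_per_param)
        print(f"{label}: >= {latency:.1f} ms/token, <= {rate:.1f} tokens/s")
```

Under these assumptions, a 70-billion-parameter model stored in 16-bit weights cannot decode faster than about 29 ms per token on a single GPU, which is why both higher bandwidth and lower-precision weights reduce latency.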

Applications of the H200 GPU

The H200 GPU has a wide range of applications, including:

- Artificial Intelligence (AI): The H200 suits AI services that must answer in real time, such as generative AI and large language model inference.

- Machine Learning (ML): The H200 accelerates ML training and inference, where fast data movement between memory and compute shortens each step.

- Deep Learning: Large neural networks benefit from the H200's memory capacity, which lets bigger models or larger batches stay on a single GPU.

- Computer Vision: High-throughput image and video models can run on the H200 when results are needed with low delay.

- Autonomous Vehicles: As a data-center GPU, the H200 is best suited to the data-center side of autonomous driving, such as training perception models and running simulation.
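Whether a workload counts as "real-time" is something you can measure rather than assume. The sketch below times repeated calls to an inference function and checks a tail-latency percentile against a deadline. The names `measure_latencies_ms`, `percentile`, `meets_deadline`, and `fake_infer` are hypothetical helpers written for this article, not part of any NVIDIA library. With an asynchronous GPU framework, the function you pass in must block until the result is ready, otherwise the timings only capture the launch.

```python
import math
import time
from typing import Callable, List


def measure_latencies_ms(infer: Callable[[], object], runs: int = 100,
                         warmup: int = 10) -> List[float]:
    """Time `runs` calls to `infer` in milliseconds after `warmup` untimed calls."""
    for _ in range(warmup):
        infer()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()  # must block until the result is actually available
        samples.append((time.perf_counter() - start) * 1e3)
    return samples


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def meets_deadline(samples: List[float], deadline_ms: float,
                   pct: float = 99.0) -> bool:
    """True if the chosen percentile of latency is within the deadline."""
    return percentile(samples, pct) <= deadline_ms


if __name__ == "__main__":
    def fake_infer():
        # Stand-in for a real model call; replace with your own inference code.
        time.sleep(0.002)

    latencies = measure_latencies_ms(fake_infer, runs=50, warmup=5)
    print(f"p50 = {percentile(latencies, 50):.2f} ms")
    print(f"p99 = {percentile(latencies, 99):.2f} ms")
    print("meets 50 ms deadline:", meets_deadline(latencies, 50.0))
```

Tail percentiles such as p99 are used here instead of the average because a real-time application fails on its slowest responses, not its typical ones.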

Advantages of the H200 GPU

The H200 GPU offers several advantages, including:

- Improved Performance: More memory capacity and bandwidth than the previous-generation H100 give the H200 headroom for larger models and larger batches.

- Low-Latency Inference: Keeping a whole model in fast on-package memory avoids slower transfers, which shortens response times for real-time processing and decision-making.

- Increased Efficiency: NVIDIA positions the H200 as delivering more inference throughput within a power profile similar to the H100's, which improves performance per watt.

- Scalability: A model that does not fit on one GPU can be spread across several H200s, and higher capacity per GPU means fewer devices are needed for a given model.
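The scalability point comes down to simple capacity arithmetic. The sketch below estimates how many GPUs are needed to hold a model's weights and KV cache. The helper `gpus_needed` and the 90% usable-memory fraction are assumptions made for illustration, and the 141 GB default is NVIDIA's published capacity. It ignores activation memory and the overhead of splitting a model across devices.

```python
import math


def gpus_needed(weight_gb: float, kv_cache_gb: float,
                memory_per_gpu_gb: float = 141.0,
                usable_fraction: float = 0.9) -> int:
    """Estimate the GPU count needed to hold weights plus KV cache.

    usable_fraction reserves headroom for activations and runtime buffers;
    0.9 is an assumed value, not a vendor recommendation.
    """
    if weight_gb < 0 or kv_cache_gb < 0:
        raise ValueError("sizes must be non-negative")
    if memory_per_gpu_gb <= 0 or not 0 < usable_fraction <= 1:
        raise ValueError("invalid memory settings")
    usable_gb = memory_per_gpu_gb * usable_fraction
    return max(1, math.ceil((weight_gb + kv_cache_gb) / usable_gb))


if __name__ == "__main__":
    # Hypothetical model: 140 GB of weights plus 20 GB of KV cache.
    print("141 GB per GPU:", gpus_needed(140, 20))
    print(" 80 GB per GPU:", gpus_needed(140, 20, memory_per_gpu_gb=80.0))
```

For this hypothetical model, the estimate comes out to two GPUs at 141 GB each versus three at 80 GB each, which illustrates how capacity per GPU affects the size of a deployment.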

In short, the H200 is a data-center GPU designed for high performance and low-latency inference. These strengths make it a strong choice for demanding AI and ML workloads, whether you’re looking to accelerate autonomous vehicle workloads, computer vision applications, or deep learning.

Future Developments

The hardware specifications of the H200 are fixed, but the software and systems around it continue to evolve. Areas to watch include:

- Increased Performance: Updates to drivers, libraries, and inference runtimes can improve performance on the same hardware, which matters as AI and ML workloads become more demanding.

- Improved Cooling Systems: Server vendors continue to refine air-cooled and liquid-cooled designs, which affects how well performance and reliability hold up under sustained load.

- Scalability: Multi-GPU servers and cluster designs continue to develop, making it easier to integrate the H200 into existing infrastructure.

Conclusion

The NVIDIA H200 GPU supports low-latency inference through its Hopper architecture, high-bandwidth memory, and strong AI performance. Its ability to process real-time data efficiently makes it a good fit for industries like healthcare, finance, and autonomous systems.

As AI workloads grow, the H200 can scale across multi-GPU servers and cloud deployments. With faster response times and improved efficiency, it helps organizations run high-speed AI inference and make decisions sooner.
