Federated learning is a machine learning approach that enables multiple devices or organizations to collaboratively train a shared model without sharing their raw local data. This approach has gained significant attention in recent years due to its potential to improve model accuracy, reduce data privacy concerns, and enhance collaboration among organizations. The NVIDIA H100 GPU offers the compute throughput, memory capacity, and interconnect bandwidth that federated training workloads rely on, making it a strong choice for organizations looking to implement this approach.

What is Federated Learning?

Federated learning is a distributed machine learning approach that enables multiple devices or organizations to collaboratively train a shared model. Each device or organization trains on its own local data and contributes only the resulting model updates to the shared model, so the data itself remains on the device or organization’s premises. This approach has several benefits, including:

- Improved model accuracy: By learning from data held at multiple sources, federated learning can improve model accuracy and reduce overfitting.

- Enhanced data privacy: Federated learning enables organizations to maintain control over their local data, reducing the risk of data breaches and unauthorized data sharing.

- Increased collaboration: Federated learning enables organizations to collaborate on machine learning projects without sharing their local data, promoting knowledge sharing and innovation.
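
The aggregation step described above can be sketched in a few lines. The example below is a minimal illustration of weighted federated averaging (often called FedAvg), assuming each participant reports a flat list of model weights and the size of its local dataset. The function name `federated_average` and the three example clients are hypothetical, and real frameworks add communication, security, and fault handling on top.

```python
from typing import List, Sequence


def federated_average(
    client_weights: Sequence[Sequence[float]],
    client_sizes: Sequence[int],
) -> List[float]:
    """Average client model weights, weighted by local dataset size."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one weight vector and one size per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total number of samples must be positive")
    dim = len(client_weights[0])
    averaged = [0.0] * dim
    for weights, size in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all clients must report the same number of weights")
        for i, w in enumerate(weights):
            # Clients with more local samples have more influence.
            averaged[i] += w * size / total
    return averaged


# Three hypothetical clients; only these weight vectors leave each site,
# never the underlying training data.
clients = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
sizes = [100, 100, 200]
global_weights = federated_average(clients, sizes)
print(global_weights)
```

Weighting by dataset size keeps a participant with very little data from pulling the shared model as strongly as one with a large dataset.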

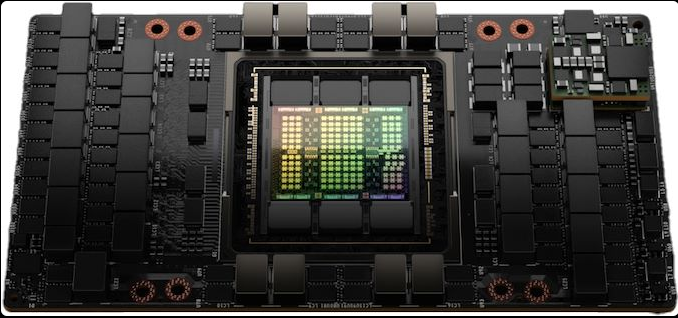

Key Specifications of H100 GPU

- CUDA Cores: 16,896 cores, delivering high-performance parallel computing for distributed AI training.

- Tensor Cores: 528 fourth-generation Tensor Cores, accelerating the matrix operations at the core of deep learning training and inference.

- Memory Capacity: 80GB HBM3, enabling faster processing of large AI models across decentralized networks.

- Memory Bandwidth: Up to 3.35TB/s, ensuring seamless data transfer for federated learning workloads.

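- Confidential Computing: Provides a hardware-based trusted execution environment (TEE) that protects data and models while they are in use on the GPU. Software techniques such as secure multi-party computation (MPC) and homomorphic encryption can be layered on top for privacy-preserving AI.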

- NVLink Support: Up to 900GB/s interconnect, enabling high-speed multi-GPU scaling in federated learning frameworks.

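- Compute Performance (SXM variant): Roughly 67TFLOPS FP64 on Tensor Cores, about 494TFLOPS TF32, and about 1,979TFLOPS FP8 for dense workloads, with the TF32 and FP8 figures roughly doubling when structured sparsity is used.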

- AI Software Stack: Compatible with NVIDIA FLARE, TensorFlow, PyTorch, and other federated learning frameworks.

- PCIe & Connectivity: Supports PCIe 5.0, which doubles per-lane bandwidth over PCIe 4.0 for host-to-GPU transfers in distributed AI systems.

- Power: Up to 700W TDP on the SXM variant, which calls for advanced cooling solutions under sustained AI workloads.

The H100 GPU empowers federated learning by enabling secure, high-performance AI training across multiple decentralized nodes, ensuring data privacy, scalability, and efficient AI model collaboration.
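
To put the capacity and bandwidth figures above in context, the following back-of-the-envelope sketch estimates how large a model's weights are at different precisions and how long one copy would take to move at each link's peak rate. The 7-billion-parameter model is a hypothetical example, the PCIe 5.0 x16 rate of about 64GB/s per direction is an assumption not taken from the list above, and real transfers run below peak rates.

```python
# Figures quoted in the specification list above.
HBM_CAPACITY_GB = 80        # HBM3 capacity
HBM_BANDWIDTH_GBPS = 3350   # 3.35 TB/s memory bandwidth
NVLINK_GBPS = 900           # NVLink interconnect

# Assumption (not from the list above): PCIe 5.0 x16, ~64 GB/s per direction.
PCIE5_X16_GBPS = 64

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}


def weights_size_gb(num_params: float, dtype: str) -> float:
    """Size of the raw weights in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def transfer_seconds(size_gb: float, link_gbps: float) -> float:
    """Ideal time to move size_gb over a link running at its peak rate."""
    return size_gb / link_gbps


params = 7e9  # hypothetical 7-billion-parameter model
size = weights_size_gb(params, "fp16")
print(f"FP16 weights: {size:.1f} GB, fits in HBM: {size <= HBM_CAPACITY_GB}")

links = {
    "HBM3": HBM_BANDWIDTH_GBPS,
    "NVLink": NVLINK_GBPS,
    "PCIe 5.0 x16 (assumed)": PCIE5_X16_GBPS,
}
for name, rate in links.items():
    print(f"{name}: {transfer_seconds(size, rate) * 1000:.1f} ms per full copy")
```

Training needs considerably more memory than the weights alone, since gradients, optimizer state, and activations also live on the GPU, so these numbers are a lower bound rather than a sizing guide.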

How Does the NVIDIA H100 GPU Support Federated Learning?

The NVIDIA H100 GPU is a powerful computing device that supports federated learning through several features:

- Multi-GPU Support: Multiple H100 GPUs can be linked over NVLink, enabling organizations to scale their federated learning workloads across GPUs and improve performance.

- Tensor Cores: The H100 GPU features tensor cores, which accelerate matrix operations and improve performance in federated learning workloads.

- Memory and Bandwidth: The H100 GPU has a large memory capacity and high bandwidth, enabling organizations to handle large datasets and improve performance in federated learning workloads.

- Software Support: The H100 GPU is supported by NVIDIA’s software stack, including CUDA libraries such as cuDNN and NCCL for training and the TensorRT SDK for optimized inference. NVIDIA FLARE provides the federated learning layer, with tools for orchestrating training across participating sites.
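
The idea behind multi-GPU scaling can be illustrated without any GPU at all. The sketch below simulates data-parallel training on a tiny one-parameter linear model: a batch is split into equal shards, each simulated worker computes a gradient on its shard, and the gradients are averaged, which is the role an all-reduce plays on real hardware. All function names and data here are hypothetical, and the code is a pure-Python stand-in rather than a use of any NVIDIA library.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float]  # (x, y) pair for a 1-D linear model y = w * x


def mse_gradient(w: float, batch: Sequence[Sample]) -> float:
    """Gradient of mean squared error with respect to w over one batch."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def split_evenly(batch: Sequence[Sample], num_workers: int) -> List[Sequence[Sample]]:
    """Split a batch into equal shards, one per simulated GPU."""
    if len(batch) % num_workers != 0:
        raise ValueError("batch size must be divisible by the number of workers")
    shard = len(batch) // num_workers
    return [batch[i * shard:(i + 1) * shard] for i in range(num_workers)]


def data_parallel_gradient(w: float, batch: Sequence[Sample], num_workers: int) -> float:
    """Average the per-shard gradients, as an all-reduce would on real GPUs."""
    shard_grads = [mse_gradient(w, s) for s in split_evenly(batch, num_workers)]
    return sum(shard_grads) / num_workers


batch = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.2)]
single = mse_gradient(0.5, batch)
parallel = data_parallel_gradient(0.5, batch, num_workers=2)
print(single, parallel)
```

With equal shard sizes the averaged gradient matches the single-device gradient up to floating-point rounding, so adding workers changes how fast a step runs and not what it computes.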

Benefits of Using the NVIDIA H100 GPU for Federated Learning

The NVIDIA H100 GPU offers several benefits for organizations implementing federated learning, including:

- Improved Performance: The H100 GPU’s multi-GPU support, tensor cores, and large memory capacity enable organizations to improve performance in federated learning workloads.

- Enhanced Scalability: The H100 GPU’s multi-GPU support enables organizations to scale their federated learning workloads and handle large datasets.

- Increased Collaboration: The H100 GPU’s software support and multi-GPU capabilities enable organizations to collaborate on machine learning projects and share knowledge.

- Improved Data Privacy: Federated learning keeps raw data on-site, and the H100 GPU’s confidential computing features help protect data and models while they are in use, reducing the risk of data breaches.

With its multi-GPU support, Tensor Cores, large memory capacity, and software stack, the H100 GPU helps organizations improve performance, scalability, collaboration, and data privacy in federated learning workloads.
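
Keeping raw data local is only part of the privacy picture, because the model updates themselves can reveal information. One software technique sometimes used alongside federated learning is secure aggregation, a simple form of the multi-party computation mentioned in the specification list. The sketch below is a toy simulation under strong assumptions: updates are already quantized to integers, a single seeded generator stands in for pairwise shared secrets, and no participant drops out. The names `mask_updates` and `aggregate` are hypothetical.

```python
import random
from typing import List

MODULUS = 2 ** 32  # all arithmetic is done modulo this value


def mask_updates(updates: List[List[int]], seed: int = 0) -> List[List[int]]:
    """Add pairwise masks that cancel when all masked updates are summed.

    For each pair (i, j) with i < j, a shared random vector is added to
    client i's update and subtracted from client j's update.
    """
    rng = random.Random(seed)  # stands in for pairwise shared secrets
    dim = len(updates[0])
    masked = [[v % MODULUS for v in u] for u in updates]
    for i in range(len(updates)):
        for j in range(i + 1, len(updates)):
            mask = [rng.randrange(MODULUS) for _ in range(dim)]
            for k in range(dim):
                masked[i][k] = (masked[i][k] + mask[k]) % MODULUS
                masked[j][k] = (masked[j][k] - mask[k]) % MODULUS
    return masked


def aggregate(masked: List[List[int]]) -> List[int]:
    """Server-side sum; the masks cancel, leaving the sum of true updates."""
    dim = len(masked[0])
    return [sum(u[k] for u in masked) % MODULUS for k in range(dim)]


updates = [[3, 10], [5, 20], [7, 30]]  # hypothetical quantized updates
masked = mask_updates(updates)
total = aggregate(masked)
print(total)  # [15, 60]
```

The server only ever sees the masked vectors, which look random on their own, yet their sum equals the sum of the true updates.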

Future Directions

The future of federated learning is exciting, with several directions for research and development. Some potential areas of focus include:

- Improved Model Accuracy: Researchers are developing aggregation algorithms that cope better with data that is distributed unevenly across participants (non-IID data).

- Enhanced Data Privacy: Researchers are developing techniques, such as differential privacy and secure aggregation, that limit what shared model updates reveal about local data.

- Increased Scalability: Researchers are working to reduce communication costs and to support larger models and larger numbers of participants.
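
As an illustration of the privacy direction above, one commonly studied technique is differential privacy, in which each participant clips its model update to a maximum L2 norm and adds Gaussian noise before sharing it. The sketch below shows only that clip-and-noise step. The function names and parameter values are hypothetical, and choosing `noise_std` to meet a formal privacy budget is outside the scope of this example.

```python
import math
import random
from typing import List, Optional


def clip_by_l2_norm(update: List[float], max_norm: float) -> List[float]:
    """Scale the update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    if norm <= max_norm or norm == 0.0:
        return list(update)
    scale = max_norm / norm
    return [v * scale for v in update]


def privatize(
    update: List[float],
    max_norm: float,
    noise_std: float,
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Clip the update, then add independent Gaussian noise to each entry."""
    if rng is None:
        rng = random.Random()
    clipped = clip_by_l2_norm(update, max_norm)
    return [v + rng.gauss(0.0, noise_std) for v in clipped]


raw_update = [3.0, 4.0]  # L2 norm is 5.0
clipped = clip_by_l2_norm(raw_update, max_norm=1.0)
noisy = privatize(raw_update, max_norm=1.0, noise_std=0.1, rng=random.Random(42))
print(clipped, noisy)
```

Clipping bounds how much any single participant can shift the shared model, and the added noise makes it harder to infer individual records from an update, at some cost in accuracy.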

Conclusion

The NVIDIA H100 GPU brings substantial computational power, scalability, and hardware security features to federated learning. With its advanced Tensor Core architecture and high-speed interconnects, the H100 accelerates distributed AI training, while its confidential computing support helps preserve data privacy. This makes it a strong fit for industries like healthcare, finance, and edge computing, where secure collaboration is crucial. Its NVLink and PCIe 5.0 connectivity helps reduce communication bottlenecks in multi-GPU and multi-node environments, supporting efficient federated learning workflows. As the demand for decentralized AI grows, the H100 is well positioned as a foundation for secure, high-performance machine learning.
