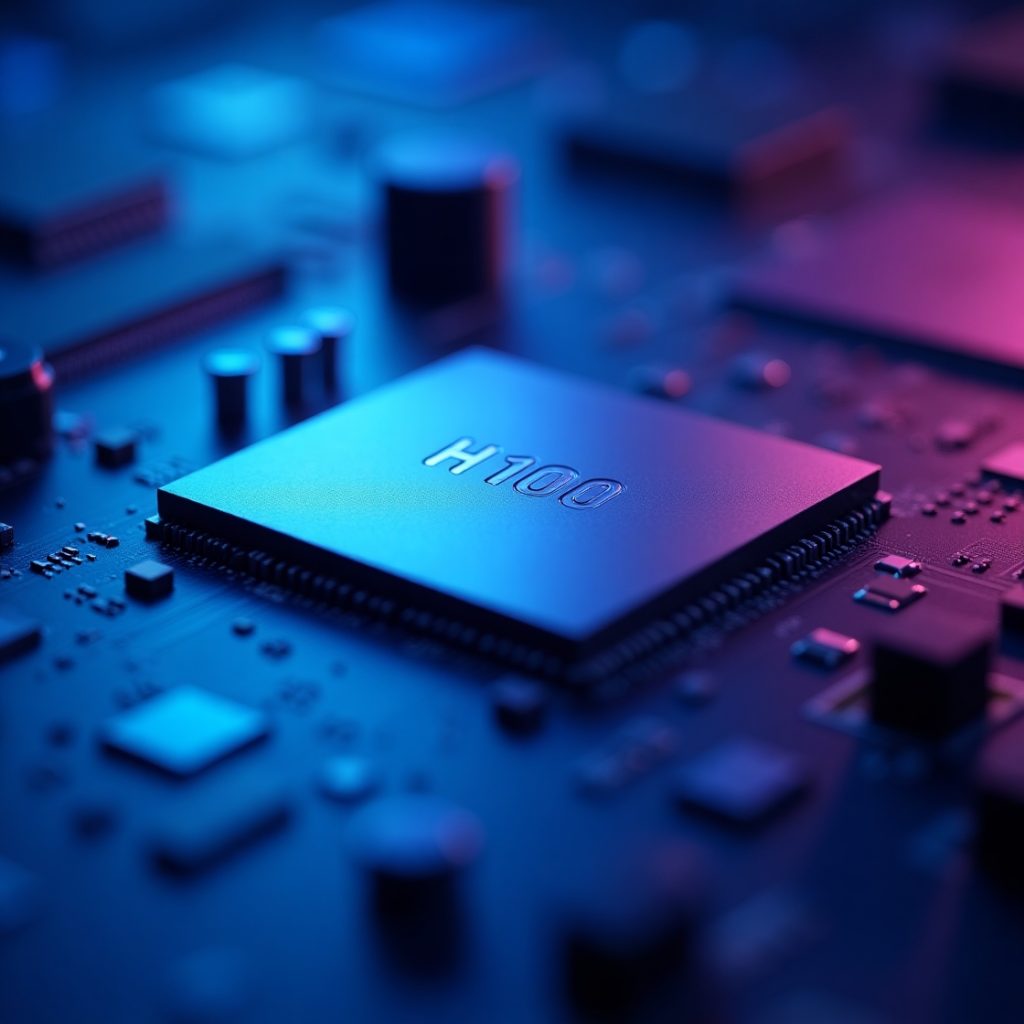

The rapid advancement of artificial intelligence (AI) has produced sophisticated generative models such as GPT and DALL-E. These models have changed how we interact with technology, enabling applications such as language translation, image generation, and text summarization. However, training and running them require significant computational resources, which makes high-performance GPUs essential for their development and deployment. In this article, we will explore the H100 GPU, a data-center accelerator that is well suited to generative AI models like GPT and DALL-E.

What is the H100 GPU?

The H100 GPU is a data-center accelerator for AI and high-performance computing (HPC) designed by NVIDIA, a leading provider of AI and deep learning hardware. It is built on the NVIDIA Hopper architecture, which improves on the previous generation in performance, power efficiency, and scalability. The H100 is designed to accelerate a wide range of AI workloads, including generative models like GPT and DALL-E.

Key Features of the H100 GPU

Several features make the H100 GPU a strong fit for generative AI models:

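1. Massive Memory: The H100 GPU features 80 GB of HBM3 memory, enough to hold the weights of a model with up to roughly 40 billion parameters at 16-bit precision on a single GPU, before accounting for activations and other runtime overhead.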

2. High-Bandwidth Memory: The H100 GPU’s HBM3 memory offers a bandwidth of about 3.35 TB/s on the SXM variant, so model weights and activations can be streamed to the compute units quickly during training and inference.

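3. Multi-Instance GPU: The H100 GPU supports Multi-Instance GPU (MIG), which partitions a single GPU into as many as seven isolated instances, each with its own share of memory and compute. This lets users run several smaller AI workloads simultaneously and increases overall system utilization.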

4. Advanced Cooling System: H100 boards are built for data-center servers with high-airflow or liquid cooling, which helps keep performance and reliability stable under sustained heavy load.
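To make the memory figure concrete, here is a minimal sketch of the arithmetic for checking whether a model's weights fit on one GPU. The helper names and the example model sizes are hypothetical, 80 GB is treated as 80e9 bytes, and the check covers weights only, so real deployments will have less headroom than it suggests.

```python
# Rough check of whether a model's weights fit in a single GPU's memory.
# Assumption: gigabytes are decimal (1 GB = 1e9 bytes) and only the weights
# are counted; activations, KV cache, and framework overhead are ignored.

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1}


def weight_footprint_gb(num_params: float, dtype: str = "fp16") -> float:
    """Return the size of the weights alone, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_gpu(num_params: float, dtype: str = "fp16",
                gpu_memory_gb: float = 80.0) -> bool:
    """True if the weights alone fit in the given amount of GPU memory."""
    return weight_footprint_gb(num_params, dtype) <= gpu_memory_gb


if __name__ == "__main__":
    for params in (7e9, 70e9):  # hypothetical model sizes
        for dtype in ("fp16", "fp8"):
            size = weight_footprint_gb(params, dtype)
            print(f"{params / 1e9:.0f}B params in {dtype}: "
                  f"{size:.0f} GB, fits: {fits_on_gpu(params, dtype)}")
```

Under these assumptions, a 7-billion-parameter model fits comfortably at 16-bit precision, while a 70-billion-parameter model fits on one GPU only at 8-bit precision.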

Generative AI Models and the H100 GPU

Generative AI models like GPT and DALL-E generate new data, such as text or images, based on patterns learned from large datasets. Both training and running them demand significant compute and memory. The H100 GPU is well suited to these workloads because it combines high throughput with enough memory capacity and bandwidth to accelerate model training and inference.
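Training needs far more memory than the weights alone. The sketch below uses a common rule of thumb, taken here as an assumption: mixed-precision training with the Adam optimizer keeps about 16 bytes of state per parameter. It also assumes that state can be split evenly across GPUs, which plain data parallelism does not do, and it ignores activations entirely. The function names are hypothetical.

```python
import math

# Assumption: mixed-precision Adam keeps roughly 16 bytes per parameter:
# 2 (fp16 weights) + 2 (fp16 gradients) + 12 (fp32 master weights and the
# two Adam moment estimates). Activation memory is not included.
TRAINING_BYTES_PER_PARAM = 16


def training_state_gb(num_params: float) -> float:
    """Approximate weight, gradient, and optimizer state in decimal GB."""
    return num_params * TRAINING_BYTES_PER_PARAM / 1e9


def min_gpus_for_training_state(num_params: float,
                                gpu_memory_gb: float = 80.0) -> int:
    """Lower bound on GPU count if the state is sharded evenly across GPUs."""
    return math.ceil(training_state_gb(num_params) / gpu_memory_gb)


if __name__ == "__main__":
    for params in (1e9, 7e9, 70e9):  # hypothetical model sizes
        print(f"{params / 1e9:.0f}B params: "
              f"{training_state_gb(params):.0f} GB of state, "
              f"at least {min_gpus_for_training_state(params)} GPU(s)")
```

This is a lower bound rather than a sizing guide, but it shows why training larger models is spread across many GPUs even when each one has 80 GB.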

GPT and the H100 GPU

GPT (Generative Pre-trained Transformer) is a family of large language models developed by OpenAI. GPT models generate human-like text from input prompts and are widely used in applications such as language translation, text summarization, and chatbots. The H100 GPU is a strong fit for GPT-style models: its memory capacity helps hold large sets of weights, and its memory bandwidth and compute throughput speed up both training and inference.
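Memory bandwidth matters for GPT-style inference because generating one token at a time for a single request typically requires reading every weight from memory once per token. The sketch below turns that into a rough upper bound on decoding speed. The function name is hypothetical, and the default bandwidth of 3.35 TB/s is an assumption based on the figure published for the H100 SXM variant; real throughput is lower once KV-cache reads and kernel overhead are included.

```python
def max_decode_tokens_per_second(num_params: float,
                                 bytes_per_param: float = 2,
                                 bandwidth_bytes_per_s: float = 3.35e12) -> float:
    """Upper bound on single-request decoding speed.

    Assumes each generated token reads all weights from GPU memory exactly
    once and that memory bandwidth is the only bottleneck.
    """
    weight_bytes = num_params * bytes_per_param
    return bandwidth_bytes_per_s / weight_bytes


if __name__ == "__main__":
    # Hypothetical 7-billion-parameter model at 16-bit and 8-bit precision.
    for bytes_per_param in (2, 1):
        bound = max_decode_tokens_per_second(7e9, bytes_per_param)
        print(f"{bytes_per_param} byte(s) per parameter: "
              f"at most about {bound:.0f} tokens/s")
```

Halving the bytes per parameter doubles the bound, which is one reason lower-precision formats are attractive for inference. Batching several requests together raises total throughput because the same weight reads are shared.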

DALL-E and the H100 GPU

DALL-E is a generative model developed by OpenAI that produces images from text prompts. It has been widely used for image generation, image editing, and art creation. The H100 GPU is well suited to models like DALL-E, offering the performance and memory needed to accelerate model training and inference.

Real-World Applications of the H100 GPU

The H100 GPU has a wide range of real-world applications, including:

1. Language Translation: The H100 GPU can accelerate language translation workloads, reducing the time needed to translate text and speech.

2. Image Generation: The H100 GPU can accelerate image generation workloads, reducing the time needed to produce images from text prompts.

3. Text Summarization: The H100 GPU can accelerate text summarization workloads, making it practical to summarize long documents quickly.

4. Chatbots: The H100 GPU can accelerate chatbot workloads, lowering response latency for user queries.

Conclusion

The H100 GPU is a cutting-edge accelerator that is well suited to generative AI models like GPT and DALL-E. With its large memory capacity, high memory bandwidth, Multi-Instance GPU support, and data-center-grade cooling, it offers the performance and reliability needed to accelerate model training and inference. Its real-world applications include language translation, image generation, text summarization, and chatbots. As AI continues to evolve, GPUs like the H100 will play an important role in the development and deployment of sophisticated AI models.
