Artificial Intelligence (AI) and Machine Learning (ML) are rapidly shaping the future of technology, driving strong demand for high-performance graphics processing units (GPUs). As researchers and developers push the boundaries of AI, the choice of GPU becomes crucial. This guide compares two GPUs aimed at different users: the GeForce RTX 4070, a consumer graphics card, and the NVIDIA L4, a low-power data center card. We’ll dive deep into both, comparing their architecture, performance, software compatibility, power efficiency, and overall suitability for various AI and ML workloads.

Setting the Scene: The Rising Demand for AI-Optimized GPUs

Imagine a world where AI is seamlessly integrated into every facet of daily life, from autonomous vehicles to intelligent personal assistants. The backbone of this AI revolution? GPUs that can handle massive data processing and complex algorithms with ease. As more industries adopt AI, the demand for GPUs that can deliver top-notch performance while remaining energy-efficient has skyrocketed. Enter the GeForce RTX 4070 and NVIDIA L4—two GPUs designed to meet these growing needs, each with its own strengths and target audience.

A Closer Look at the Architecture and Specifications

GeForce RTX 4070: The Powerhouse for Versatile Applications

Built on NVIDIA’s Ada Lovelace architecture, the GeForce RTX 4070 is engineered to provide significant improvements over previous generations. Key specifications include:

- CUDA Cores: 5888

- Tensor Cores: 184

- RT Cores: 46

- Base Clock: 1.92 GHz

- Boost Clock: 2.475 GHz

- Memory: 12GB GDDR6X

- Memory Bandwidth: 504 GB/s

The RTX 4070 excels in a wide range of applications, from gaming to rendering and, importantly, AI and ML tasks. Its third-generation Ray Tracing (RT) Cores and fourth-generation Tensor Cores make it a versatile option for developers and researchers alike.

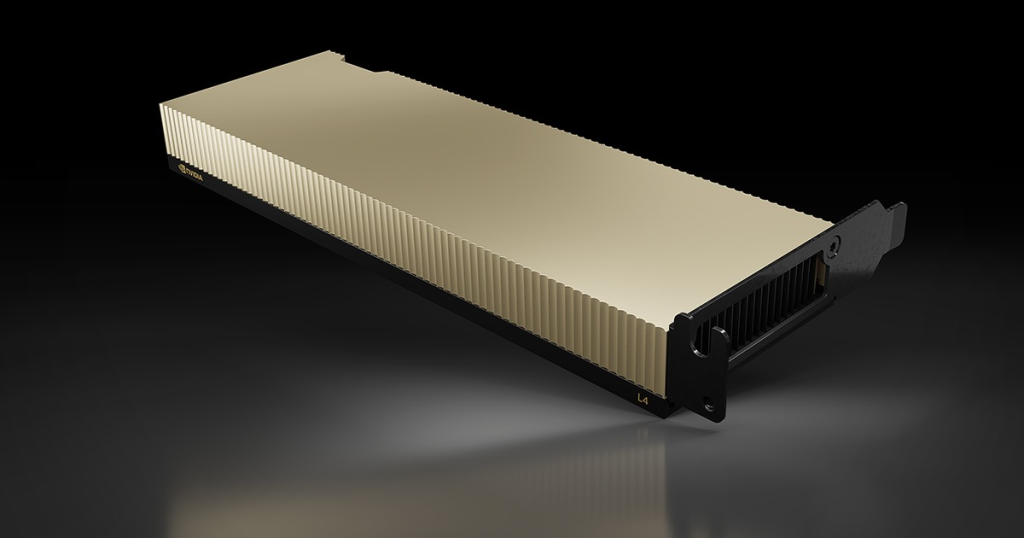

NVIDIA L4: Enterprise-Grade Performance for Intensive Workloads

The NVIDIA L4, also built on the Ada Lovelace architecture, is a low-profile data center GPU tailored for enterprise environments where intensive computational tasks run around the clock. Key specifications include:

- CUDA Cores: 7424

- Tensor Cores: 232

- RT Cores: 58

- Base Clock: 0.795 GHz

- Boost Clock: 2.04 GHz

- Memory: 24GB GDDR6

- Memory Bandwidth: 300 GB/s

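Designed for dense, scalable deployments, the L4 combines a 72 W power envelope with a single-slot, low-profile form factor, so many cards can be installed in one server. NVIDIA positions it primarily for AI inference, video, and graphics workloads in data centers and large organizations, though it can also handle moderate training jobs.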

NVIDIA L4 vs. GeForce RTX 4070: A Head-to-Head Comparison

To truly understand how these GPUs stack up, let’s explore a detailed comparison chart highlighting their key features and differences:

| Feature | NVIDIA L4 | GeForce RTX 4070 |
|---|---|---|
| Architecture | Ada Lovelace | Ada Lovelace |
| CUDA Cores | 7424 | 5888 |
| Tensor Cores | 232 | 184 |
| RT Cores | 58 | 46 |
| Base Clock | 0.795 GHz | 1.92 GHz |
| Boost Clock | 2.04 GHz | 2.475 GHz |
| Memory | 24GB GDDR6 | 12GB GDDR6X |
| Memory Bandwidth | 300 GB/s | 504 GB/s |
| Power Consumption | 72 W | 200 W |
| Form Factor | Single-slot, low-profile | Dual-slot |
| Target Market | Enterprise | Consumer/Professional |
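As a rough cross-check on the specifications, you can estimate theoretical peak FP32 throughput as CUDA cores × 2 FLOPs per clock (one fused multiply-add) × boost clock. The sketch below applies that formula to NVIDIA's published figures: 7,424 cores, a 2,040 MHz boost clock, and 72 W for the L4, and 5,888 cores, a 2,475 MHz boost clock, and 200 W for the RTX 4070. It is a back-of-the-envelope estimate under those assumptions, not a benchmark. Sustained clocks can sit below the rated boost, especially within the L4's 72 W limit.

```python
# Rough theoretical peak FP32 throughput and throughput per watt.
# Assumption: each CUDA core retires one FMA (= 2 FLOPs) per clock at the rated
# boost clock. Real workloads rarely sustain this peak.
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    name: str
    cuda_cores: int
    boost_clock_ghz: float
    power_watts: float


def peak_fp32_tflops(gpu: GpuSpec) -> float:
    """Cores x 2 FLOPs/clock x clock (GHz) gives GFLOPS; divide by 1000 for TFLOPS."""
    return gpu.cuda_cores * 2 * gpu.boost_clock_ghz / 1000


def tflops_per_watt(gpu: GpuSpec) -> float:
    """Peak FP32 TFLOPS divided by rated board power."""
    return peak_fp32_tflops(gpu) / gpu.power_watts


GPUS = [
    GpuSpec("NVIDIA L4", cuda_cores=7424, boost_clock_ghz=2.04, power_watts=72),
    GpuSpec("GeForce RTX 4070", cuda_cores=5888, boost_clock_ghz=2.475, power_watts=200),
]

if __name__ == "__main__":
    for gpu in GPUS:
        print(f"{gpu.name}: ~{peak_fp32_tflops(gpu):.1f} TFLOPS FP32, "
              f"~{tflops_per_watt(gpu) * 1000:.0f} GFLOPS/W")
```

Under these assumptions the two cards land at similar peak FP32 throughput (about 30 TFLOPS for the L4 and 29 TFLOPS for the RTX 4070). On paper, the L4 delivers roughly three times as much per watt.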

Training and Inference: The Core of AI Performance

Performance in Training: Tackling ML Models

Training AI models is one of the most resource-intensive tasks in the field. Both the RTX 4070 and L4 bring significant power to the table, but their capabilities shine in different areas.

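- GeForce RTX 4070: Well suited to training small to medium-sized models. Its 184 Tensor Cores accelerate the matrix multiplications at the heart of deep learning, and its 504 GB/s of memory bandwidth keeps them fed. Its 12 GB of memory, however, limits model and batch size.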

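- NVIDIA L4: The L4 has more Tensor Cores (232) and twice the memory (24 GB), so larger models and batch sizes fit on a single card. Its memory bandwidth is lower (300 GB/s), however, and NVIDIA positions it mainly for inference. Large-scale training of big models typically runs on higher-end data center GPUs.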
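Memory capacity usually decides which models can be trained at all. A common rule of thumb for mixed-precision training with the Adam optimizer is about 16 bytes per parameter: FP16 weights and gradients (2 bytes each), plus FP32 master weights and two FP32 Adam moments (4 bytes each). The minimal sketch below applies that rule to the published capacities of 12 GB (RTX 4070) and 24 GB (L4), adding an assumed 20% margin for activations and framework overhead. Both the per-parameter cost and the margin are simplifying assumptions. Activation memory in particular grows with batch size and sequence length.

```python
# Rough check of whether a model's training state fits in GPU memory.
# Assumptions (illustrative, not measured):
#   - mixed-precision training with Adam: ~16 bytes per parameter
#     (2 B FP16 weights + 2 B FP16 grads + 4 B FP32 master weights
#      + 4 B + 4 B FP32 Adam moments)
#   - a flat 20% margin for activations, CUDA context, and fragmentation

BYTES_PER_PARAM_ADAM_MIXED = 2 + 2 + 4 + 4 + 4  # = 16
OVERHEAD_FACTOR = 1.2
GIB = 1024 ** 3


def training_memory_gib(num_params: float) -> float:
    """Estimated training footprint in GiB for a model with num_params parameters."""
    return num_params * BYTES_PER_PARAM_ADAM_MIXED * OVERHEAD_FACTOR / GIB


def fits(num_params: float, vram_gib: float) -> bool:
    """True if the estimated footprint is within the card's memory."""
    return training_memory_gib(num_params) <= vram_gib


if __name__ == "__main__":
    for params in (125e6, 350e6, 1.3e9):
        need = training_memory_gib(params)
        print(f"{params / 1e6:>6.0f}M params: ~{need:5.1f} GiB | "
              f"RTX 4070 (12 GB): {fits(params, 12)} | L4 (24 GB): {fits(params, 24)}")
```

With these assumptions, a 1.3B-parameter model needs about 23 GiB. It fits on the L4 but not on the RTX 4070, while a 350M-parameter model fits on both.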

Inference Performance: Deploying AI in Real-Time

Inference, the process of running trained AI models to make predictions, is critical for applications that require real-time processing.

- GeForce RTX 4070: Well suited to latency-sensitive inference such as chatbots and recommendation systems, as long as the model fits in its 12 GB of memory.

- NVIDIA L4: Better suited to inference at data center scale, such as serving high-traffic AI applications. Its 24 GB of memory and 72 W power draw let many cards run side by side in one server.
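For autoregressive text generation at batch size 1, a common simplification is that each new token requires reading every model weight from memory once. That makes memory bandwidth the ceiling on tokens per second. The minimal sketch below applies this assumption to a hypothetical 7B-parameter model, using the published bandwidth and capacity figures: 504 GB/s and 12 GB for the RTX 4070, and 300 GB/s and 24 GB for the L4. It ignores KV-cache reads, compute time, and overheads, so real single-request speeds will be lower.

```python
# Upper bound on single-stream (batch size 1) decode speed for an LLM whose
# token generation is limited by memory bandwidth.
# Simplifying assumption: each generated token reads every weight once and
# nothing else (KV cache, activations, kernel overhead) costs any time.

GIB = 1024 ** 3


def weights_fit(num_params: float, bytes_per_param: float, vram_gib: float) -> bool:
    """True if the model weights alone fit in GPU memory (no KV cache or overhead)."""
    return num_params * bytes_per_param <= vram_gib * GIB


def max_tokens_per_second(bandwidth_gb_s: float, num_params: float, bytes_per_param: float) -> float:
    """Bandwidth (decimal GB/s) divided by the bytes read per generated token."""
    return bandwidth_gb_s * 1e9 / (num_params * bytes_per_param)


if __name__ == "__main__":
    params = 7e9  # hypothetical 7B-parameter model
    gpus = (("GeForce RTX 4070", 504, 12), ("NVIDIA L4", 300, 24))
    for name, bandwidth, vram in gpus:
        for label, bpp in (("FP16", 2.0), ("INT8", 1.0)):
            if weights_fit(params, bpp, vram):
                tps = max_tokens_per_second(bandwidth, params, bpp)
                print(f"{name} {label}: <= {tps:.0f} tokens/s")
            else:
                print(f"{name} {label}: weights do not fit in {vram} GB")
```

Under this model, the RTX 4070 generates tokens faster whenever the weights fit, while the L4's larger memory can hold models the RTX 4070 cannot. Batching requests raises aggregate throughput on either card, because one pass over the weights serves the whole batch.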

Compatibility with Software Ecosystems

Deep Learning Frameworks: Building and Training AI Models

Both the RTX 4070 and L4 run on NVIDIA’s CUDA platform, so popular deep learning frameworks like TensorFlow, PyTorch, and Keras work on either GPU.

- GeForce RTX 4070: Well-supported in the consumer space, the RTX 4070 benefits from extensive community resources and compatibility with a broad range of software, including gaming and creative tools.

- NVIDIA L4: Targeted at enterprise users, the L4 is supported by NVIDIA AI Enterprise, NVIDIA’s software suite for running production AI frameworks and applications in the data center.
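Both cards are Ada Lovelace GPUs with CUDA compute capability 8.9, so the same framework code targets either one. The minimal sketch below assumes a CUDA-enabled PyTorch install. It reports the detected GPU's memory and compute capability, and it picks BF16 for mixed precision when the architecture supports it (compute capability 8.0 and newer). The helper names `pick_autocast_dtype` and `describe_gpu` are hypothetical.

```python
# Inspect the visible GPU from PyTorch and choose a mixed-precision dtype.
# Assumes a CUDA-enabled PyTorch build; degrades gracefully if torch or a GPU is absent.


def pick_autocast_dtype(major: int, minor: int) -> str:
    """BF16 Tensor Core support starts at compute capability 8.0; Ada GPUs report 8.9."""
    return "bfloat16" if (major, minor) >= (8, 0) else "float16"


def describe_gpu() -> str:
    """Return a one-line summary of CUDA device 0, or a reason it is unavailable."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"
    if not torch.cuda.is_available():
        return "No CUDA device visible"
    props = torch.cuda.get_device_properties(0)
    return (f"{props.name}: {props.total_memory / 1024 ** 3:.1f} GiB, "
            f"{props.multi_processor_count} SMs, compute capability "
            f"{props.major}.{props.minor}, autocast dtype "
            f"{pick_autocast_dtype(props.major, props.minor)}")


if __name__ == "__main__":
    print(describe_gpu())
```

The returned dtype name corresponds to `torch.bfloat16` or `torch.float16`. You can pass either one to `torch.autocast(device_type="cuda", dtype=...)` in a training or inference loop.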

Developer Tools and Support: Enhancing the Development Experience

NVIDIA provides a wealth of tools and libraries to support developers working with both GPUs.

- GeForce RTX 4070: Includes access to NVIDIA’s CUDA Toolkit, cuDNN, and TensorRT, making it easier to develop and optimize AI models.

- NVIDIA L4: The same tools are available. In enterprise deployments, the L4 is also covered by NVIDIA’s enterprise-level support. It pairs naturally with NVIDIA NGC (NVIDIA GPU Cloud), which provides pre-trained models and containers to streamline AI application deployment.

Power Efficiency and Thermal Management: The Balance of Performance and Sustainability

Efficiency is a critical factor when choosing a GPU, particularly for workloads that run continuously.

- GeForce RTX 4070: While powerful, the RTX 4070 is designed for consumer use. With a rated power of 200 W and fan-based cooling, it draws more power and produces more heat than the L4 during intensive, sustained tasks.

- NVIDIA L4: Built with data centers in mind, the L4 is rated at just 72 W. It uses a passively cooled design that relies on server airflow, giving it strong performance per watt under sustained workloads.
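To see how either card behaves under a sustained load, you can sample its power draw and temperature with `nvidia-smi`, which ships with the NVIDIA driver. The minimal sketch below assumes `nvidia-smi` is on the PATH. The query fields (`power.draw`, `power.limit`, `temperature.gpu`) are standard `nvidia-smi --query-gpu` fields. The helper names `parse_line` and `query_gpus` are hypothetical.

```python
# Sample power draw, power limit, and temperature for each visible NVIDIA GPU.
import subprocess
from typing import Dict, List, Optional, Union

QUERY_FIELDS = "name,power.draw,power.limit,temperature.gpu"
GpuReading = Dict[str, Union[str, Optional[float]]]


def _to_float(value: str) -> Optional[float]:
    """nvidia-smi reports unsupported fields as '[N/A]'; map those to None."""
    try:
        return float(value)
    except ValueError:
        return None


def parse_line(line: str) -> GpuReading:
    """Parse one line of output produced with --format=csv,noheader,nounits."""
    name, draw, limit, temp = (field.strip() for field in line.split(","))
    return {
        "name": name,
        "power_draw_w": _to_float(draw),
        "power_limit_w": _to_float(limit),
        "temp_c": _to_float(temp),
    }


def query_gpus() -> List[GpuReading]:
    """Run nvidia-smi once and return one reading per visible GPU."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return [parse_line(line) for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    try:
        for reading in query_gpus():
            print(reading)
    except (FileNotFoundError, subprocess.CalledProcessError):
        print("nvidia-smi not found or failed; is an NVIDIA driver installed?")
```

Calling `query_gpus()` periodically while a training or inference job runs shows how close each card stays to its power limit.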

Use Cases: Matching the GPU to Your Needs

GeForce RTX 4070: Versatility for a Broad Range of Applications

- Small to Medium ML Projects: Ideal for individual researchers, developers, and small teams working on less complex models.

- Real-Time Applications: The RTX 4070 handles latency-sensitive inference well, making it a good fit for interactive AI, gaming AI, and AR/VR applications.

NVIDIA L4: Tailored for Enterprise-Level AI and ML

- Enterprise AI and ML: The L4 is best suited to large organizations and data centers that need to deploy AI capabilities at scale.

- Larger Models and Datasets: Its 24 GB of memory holds larger models and batches than the RTX 4070’s 12 GB, which matters when serving big models or processing extensive datasets.

Conclusion: Choosing the Right GPU for Your AI Journey

In the quest to harness AI’s potential, choosing between the GeForce RTX 4070 and the NVIDIA L4 depends on your needs. The RTX 4070 is versatile and cost-effective for individual developers and small teams. The L4, on the other hand, offers twice the memory, far lower power draw, and a data center form factor suited to large-scale AI deployments, particularly inference. Consider your project’s requirements, budget, and scalability when selecting the best GPU for your goals.
