Machine learning (ML) and artificial intelligence (AI) workloads require immense computational power, making GPUs the backbone of modern AI infrastructure. Whether you’re training large-scale models or running inference at scale, selecting the right GPU is crucial. In this guide, we explore the top 5 GPUs for ML in 2025 (NVIDIA H100, A100, H200, L40S, and AMD Instinct MI300X) to help you choose the best hardware for your needs.

In 2025, the landscape of machine learning (ML) is dominated by powerful GPUs that enhance computational capabilities for AI applications. The NVIDIA H100, built on the Hopper architecture, offers exceptional performance with 80GB of HBM3 memory and advanced tensor cores, making it ideal for training large-scale models. Following closely is the NVIDIA A100, which supports versatile workloads and features up to 80GB of HBM2e memory, excelling in both training and inference tasks. The newer NVIDIA H200 pushes boundaries further with enhanced memory capacity and performance, catering to demanding ML applications. Meanwhile, the NVIDIA L40S, designed for enterprise use, provides a balance of performance and efficiency with its Ada Lovelace architecture and 48GB of GDDR6 memory, optimized for real-time AI deployments. Lastly, the AMD Instinct MI300X stands out with its impressive 192GB of HBM3 memory, making it a robust choice for memory-intensive tasks in scientific computing and large datasets. Together, these GPUs represent the pinnacle of technology for machine learning in 2025.

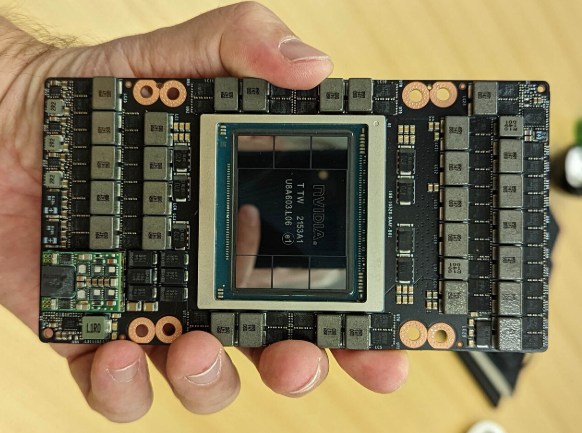

1. NVIDIA H100: The AI Powerhouse

Key Features:

- Architecture: Hopper

- Memory: 80GB HBM3

- FP16 Performance: ~1,000 TFLOPS

- Key Use Cases: AI training, large language models (LLMs), high-performance computing (HPC)

The NVIDIA H100 is the gold standard for AI training, offering exceptional parallel processing capabilities. Its Transformer Engine uses FP8 precision to speed up transformer layers, which matters for large-scale AI models like GPT and Stable Diffusion. If you need enterprise-grade AI performance, the H100 is a top choice.
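
To put the FP16 figure above in context, here is a rough sketch of how peak throughput translates into training time. It assumes the common rule of thumb of about 6 FLOPs per parameter per token for dense transformers and a sustained utilization of 40% of peak. Neither number comes from this guide, and the workload in the example (7B parameters, 1T tokens, 64 GPUs) is hypothetical.

```python
def training_days(params, tokens, peak_tflops, utilization=0.4, num_gpus=1):
    """Rough wall-clock estimate for training a dense transformer.

    Assumptions (not from the article): ~6 FLOPs per parameter per token,
    and a fixed fraction of peak throughput sustained for the whole run.
    """
    total_flops = 6 * params * tokens
    sustained_flops_per_s = peak_tflops * 1e12 * utilization * num_gpus
    seconds = total_flops / sustained_flops_per_s
    return seconds / 86_400  # seconds per day


# Hypothetical workload: 7B parameters, 1T tokens, 64 GPUs at ~1,000 TFLOPS each.
days = training_days(params=7e9, tokens=1e12, peak_tflops=1_000, num_gpus=64)
print(f"Estimated training time: {days:.1f} days")
```

Real runs will differ depending on utilization, interconnect, and precision, so treat the result as an order-of-magnitude guide only.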

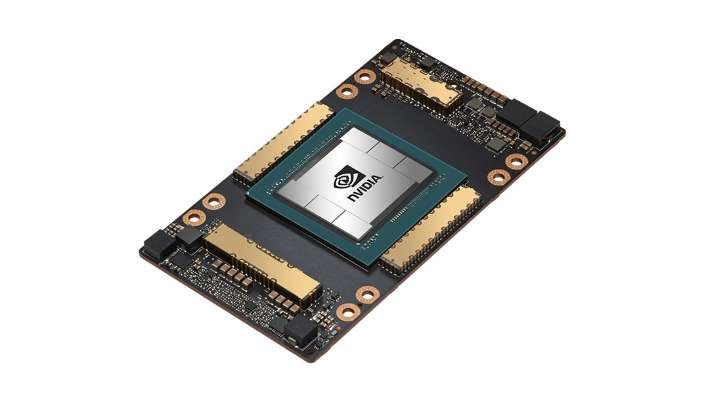

2. NVIDIA A100: The Cost-Effective ML Workhorse

Key Features:

- Architecture: Ampere

- Memory: 80GB HBM2e

- FP16 Performance: ~312 TFLOPS

- Key Use Cases: Cloud AI, ML model training, HPC workloads

The NVIDIA A100 remains one of the most widely used ML GPUs. Although it is a generation older than the H100, it still delivers efficient AI training and is commonly available on cloud platforms like AWS, Google Cloud, and Azure. It’s an excellent choice for those who want strong performance at a lower cost than the H100.
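
One way to check the "lower cost" claim for your own provider is to compare price per unit of peak throughput. The sketch below uses the FP16 figures quoted in this guide (~1,000 TFLOPS for the H100, ~312 TFLOPS for the A100). The hourly price is a made-up placeholder, not a real quote, and the function names are illustrative.

```python
def cost_per_pflop_hour(price_per_hour, peak_tflops):
    """Price of one hour of 1 PFLOPS of peak FP16 throughput."""
    return price_per_hour / (peak_tflops / 1_000)


def break_even_price(reference_price, reference_tflops, candidate_tflops):
    """Hourly price at which the candidate costs the same per peak FLOP."""
    return reference_price * candidate_tflops / reference_tflops


# FP16 figures quoted in this guide.
H100_TFLOPS = 1_000
A100_TFLOPS = 312

# Hypothetical hourly price, for illustration only; substitute a real quote.
h100_price = 4.00

limit = break_even_price(h100_price, H100_TFLOPS, A100_TFLOPS)
print(f"A100 is cheaper per peak FLOP below ${limit:.2f}/hour")
```

Peak FLOPS is only one axis. If a job is limited by memory or by availability rather than raw compute, the A100 can be the better buy even above this break-even price.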

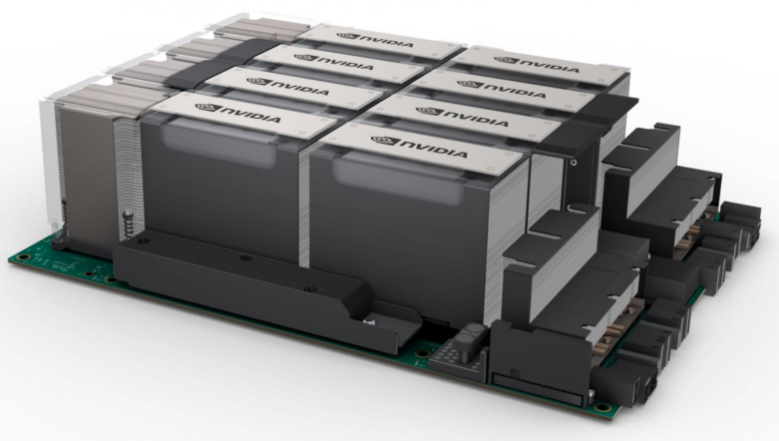

3. NVIDIA H200: The Enhanced AI Accelerator

Key Features:

- Architecture: Hopper (Enhanced H100)

- Memory: 141GB HBM3e

- FP16 Performance: ~1,000 TFLOPS

- Key Use Cases: Large-scale AI training, data-intensive ML workloads

The NVIDIA H200 improves upon the H100 with more memory (141GB versus 80GB) and higher memory bandwidth, while keeping the same Hopper compute. That makes it well suited to AI researchers working with very large models and datasets. If you need extra memory for AI workloads, the H200 is the upgrade to consider.

4. NVIDIA L40S: AI Inference and Fine-Tuning Beast

Key Features:

- Architecture: Ada Lovelace

- Memory: 48GB GDDR6

- FP16 Performance: ~362 TFLOPS

- Key Use Cases: AI inference, fine-tuning, data center workloads

The NVIDIA L40S is designed for AI inference and model fine-tuning, offering high efficiency at lower power consumption. It is a good fit for enterprises that want to deploy AI applications in real time without excessive hardware costs.

5. AMD Instinct MI300X: The NVIDIA Alternative

Key Features:

- Architecture: CDNA 3

- Memory: 192GB HBM3

- FP16 Performance: ~1,300 TFLOPS

- Key Use Cases: HPC, AI model training, cloud-based AI workloads

The AMD Instinct MI300X is AMD’s flagship AI GPU. With 192GB of HBM3, it offers more memory than any of the NVIDIA GPUs covered here, making it a great choice for memory-intensive AI tasks. With growing support for ROCm and AI frameworks, AMD is positioning itself as a strong competitor in the AI GPU market.
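
Memory capacity is easiest to reason about with a quick fit check. The sketch below uses the memory sizes listed in this guide and estimates whether a model's weights fit on a single GPU. It assumes 2 bytes per parameter (FP16/BF16) and a 20% headroom factor for activations and runtime overhead. Both are simplifying assumptions, and the helper names are illustrative.

```python
# Memory sizes as listed in this guide, in GB.
GPU_MEMORY_GB = {
    "NVIDIA H100": 80,
    "NVIDIA A100": 80,
    "NVIDIA H200": 141,
    "NVIDIA L40S": 48,
    "AMD Instinct MI300X": 192,
}


def weights_gb(params_billions, bytes_per_param=2):
    """Memory for weights alone; 2 bytes per parameter corresponds to FP16/BF16."""
    return params_billions * bytes_per_param  # billions of params * bytes = GB


def gpus_that_fit(params_billions, bytes_per_param=2, headroom=1.2):
    """GPUs whose memory covers the weights plus an assumed headroom factor."""
    needed = weights_gb(params_billions, bytes_per_param) * headroom
    return [name for name, gb in GPU_MEMORY_GB.items() if gb >= needed]


for size in (13, 34, 70):
    print(f"{size}B parameters: {gpus_that_fit(size)}")
```

Training needs far more memory than this (gradients and optimizer state), and long-context inference adds a KV cache, so this check is a lower bound on what you need.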

Comparative Analysis

| GPU Model | Architecture | Memory Type | Memory Size | CUDA Cores | Tensor Cores | Memory Bandwidth |
|---|---|---|---|---|---|---|
| NVIDIA H100 | Hopper | HBM3 | 80GB | 16,896 | 528 | 3,350 GB/s |
| NVIDIA A100 | Ampere | HBM2e | 40/80GB | 6,912 | 432 | 2,039 GB/s |
| NVIDIA H200 | Hopper | HBM3e | 141GB | – | – | – |
| NVIDIA L40S | Ada Lovelace | GDDR6 | 48GB | 18,176 | 568 | 864 GB/s |
| AMD MI300X | CDNA 3 | HBM3 | 192GB | – | – | Up to 5,300 GB/s |
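
Memory bandwidth matters most for LLM inference, where generating each token typically requires reading every weight once. As a rough sketch under that assumption, the bandwidth column gives an upper bound on single-stream decoding speed. The 7B-parameter FP16 model is a hypothetical example, and the H200 is omitted because the table lists no bandwidth for it.

```python
# Memory bandwidth from the table, in GB/s.
BANDWIDTH_GB_S = {
    "NVIDIA H100": 3_350,
    "NVIDIA A100": 2_039,
    "NVIDIA L40S": 864,
    "AMD MI300X": 5_300,
}


def max_decode_tokens_per_s(bandwidth_gb_s, params_billions, bytes_per_param=2):
    """Upper bound on single-stream tokens/s if all weights are read once per token."""
    model_gb = params_billions * bytes_per_param
    return bandwidth_gb_s / model_gb


# Hypothetical 7B-parameter model stored in FP16 (14 GB of weights).
for name, bandwidth in BANDWIDTH_GB_S.items():
    bound = max_decode_tokens_per_s(bandwidth, 7)
    print(f"{name}: at most ~{bound:.0f} tokens/s per stream")
```

Batching, quantization, and KV-cache traffic all shift the real number, but the ranking shows why bandwidth can matter more than peak TFLOPS for inference.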

Conclusion

Choosing the right GPU depends on your specific machine learning workload. For general-purpose AI workloads where versatility is key, the NVIDIA A100 or H100 is an excellent choice. If you’re focused on high-performance computing with large models, consider the H200 or MI300X, especially if memory capacity is a priority. For real-time applications requiring rapid inference, the L40S is highly recommended. By understanding the strengths and weaknesses of each GPU model, you can make a more informed decision that aligns with your project requirements and budget constraints.
