The NVIDIA H100 Tensor Core GPUs, built on the groundbreaking Hopper architecture, are engineered to deliver unparalleled performance across AI, machine learning (ML), and high-performance computing (HPC) workloads. These GPUs come in various configurations tailored to specific computing needs, ensuring maximum efficiency and scalability. In this blog, we’ll explore the key variants of the H100: H100 SXM5, H100 PCIe, H100 NVL, DGX H100, and HGX H100, each designed to optimize different deployment scenarios.

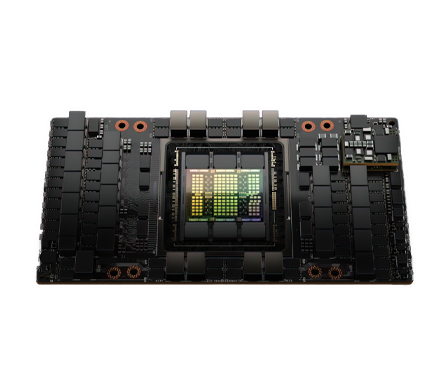

1. NVIDIA H100 SXM5: The Powerhouse for AI and HPC

The H100 SXM5 is one of the most powerful configurations in the H100 series. This variant is designed for high-performance AI workloads, with a focus on training complex deep learning models and accelerating scientific simulations.

- Key Features:

- Memory: 80GB of HBM2e memory with 3.2 TB/s bandwidth

- Interconnect: NVLink for efficient GPU-to-GPU communication

- Use Case: Ideal for large-scale AI training, data analytics, and simulations.

The SXM5 variant leverages the advanced NVLink and NVSwitch technology, enabling multiple GPUs to communicate at unprecedented speeds, crucial for handling the massive datasets used in AI training.

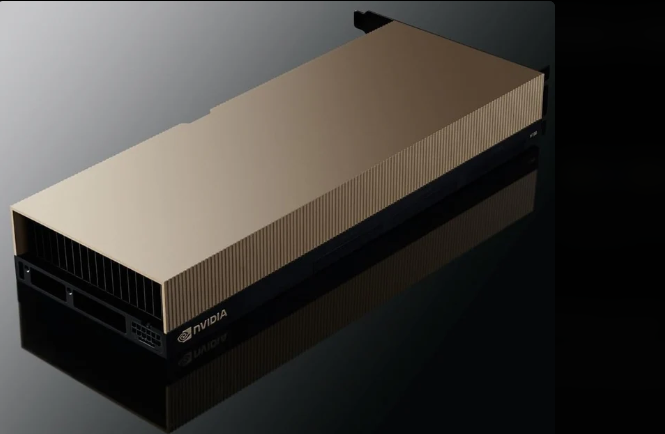

2. NVIDIA H100 PCIe: Flexibility and Compatibility for Data Centers

The H100 PCIe variant offers the flexibility of a standard PCIe card, making it a preferred choice for many data centers and enterprises that require customizable server configurations. This version provides the same powerful performance as the SXM5 but in a form factor that can be easily integrated into traditional servers.

- Key Features:

- Memory: 80GB of HBM2e memory

- Form Factor: Standard PCIe card, compatible with existing server infrastructure

- Use Case: Suitable for traditional cloud environments, enterprise deployments, and smaller-scale AI workloads.

With NVLink support available, it’s perfect for those who need scalability within a flexible architecture, without compromising on performance.

3. NVIDIA H100 NVL: Tailored for Large Language Models (LLMs)

The H100 NVL variant takes the power of the H100 and enhances it for AI-driven language models. With a dual-GPU configuration and 188GB of memory, the NVL variant is optimized for training and running large language models such as GPT-4.

- Key Features:

- Memory: 188GB of memory (94GB per GPU)

- Design: Dual-GPU configuration to handle more complex models

- Use Case: Best for generative AI applications and large-scale LLMs.

The H100 NVL’s large memory capacity is perfect for high-demand AI tasks, offering better scalability for resource-intensive models.

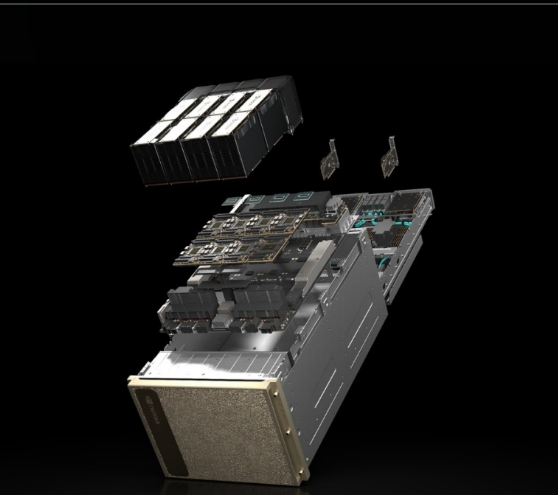

4. NVIDIA DGX H100: A Complete AI Supercomputer Solution

For those looking for an integrated AI solution, the DGX H100 offers a complete system designed to maximize AI and HPC performance. This system is pre-configured with eight H100 GPUs, making it an ideal choice for research institutions and enterprises that need to handle massive amounts of data processing.

- Key Features:

- Configuration: 8 H100 GPUs in a single unit

- Interconnect: NVLink and NVSwitch for high-speed GPU-to-GPU communication

- Use Case: Perfect for research and data centers running large-scale AI models, simulations, and scientific computing.

The DGX H100 is the all-in-one solution for organizations that want to deploy an AI supercomputer with minimal configuration, delivering incredible performance out of the box.

5. NVIDIA HGX H100: Scalable AI for the Cloud

The HGX H100 is designed for scalable AI workloads in the cloud. It is typically deployed with up to 8 H100 GPUs, offering immense parallel processing power. The HGX platform is optimized for cloud service providers and large enterprises that need to scale their AI applications quickly.

- Key Features:

- Configuration: Can support up to 8 H100 GPUs per server

- Connectivity: NVLink, NVSwitch, and Quantum InfiniBand for ultra-fast data transfers

- Use Case: Best for cloud service providers and enterprises needing scalability for AI, ML, and HPC.

With its highly flexible design and the ability to scale, the HGX H100 is a great fit for organizations looking to quickly scale their cloud-based AI applications.

Which H100 Variant is Right for You?

Choosing the right H100 variant depends on your specific workload requirements. Whether you need the high-memory capacity of the H100 NVL for generative AI, the flexibility of the H100 PCIe for data centers, or the full AI supercomputer experience with the DGX H100, NVIDIA offers a solution tailored to your needs.

Each of these variants pushes the boundaries of GPU technology, helping organizations accelerate AI research, development, and deployment. With NVIDIA’s Hopper architecture powering them, these GPUs offer unmatched performance and scalability for modern AI and HPC workloads.

Conclusion

From the H100 SXM5 for intensive AI training to the DGX H100 for integrated AI supercomputing, NVIDIA offers a range of powerful GPUs to meet the demands of different use cases. Whether you are in AI, deep learning, or scientific computing, the H100 series delivers the power and scalability you need to accelerate innovation.

Leave a Reply

You must be logged in to post a comment.