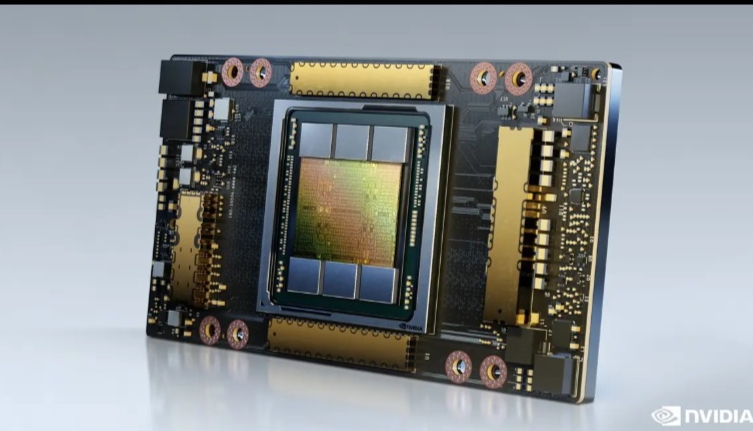

The NVIDIA GH100 GPU, the flagship of the Hopper architecture, represents a significant leap in GPU technology. Designed for the growing demands of AI, high-performance computing (HPC), and data center workloads, the GH100 substantially raises performance over the previous Ampere generation.

A Deep Dive into Hopper Architecture

The GH100 is the flagship GPU of NVIDIA’s Hopper architecture, which was purpose-built for next-generation AI and HPC applications. Named after Grace Hopper, a pioneer in computer programming, this architecture introduces a host of innovations that make it one of the most powerful and efficient GPUs to date.

Key Features of GH100:

- Transformer Engine: The GH100 introduces the Transformer Engine, which combines fourth-generation Tensor Cores with software that dynamically chooses between FP8 and 16-bit precision layer by layer. It is designed to accelerate training and inference of transformer models, which underpin natural language processing (NLP) and are increasingly used in large-scale recommendation systems.

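- FP8 Precision: Hopper adds FP8 (8-bit floating point) support to its Tensor Cores in two formats, E4M3 and E5M2. Compared with FP16, FP8 halves the memory and bandwidth needed per value and doubles peak Tensor Core throughput. Combined with the Transformer Engine's per-layer precision management, this speeds up training and inference while preserving accuracy for many models.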

- NVLink and NVSwitch: Fourth-generation NVLink gives each H100 SXM5 GPU up to 900 GB/s of total GPU-to-GPU bandwidth, and third-generation NVSwitch connects many GPUs so they can work together efficiently on large AI models and HPC tasks.

- Fourth-Generation Tensor Cores: The GH100's upgraded Tensor Cores accelerate the matrix multiply-accumulate operations at the heart of AI and many HPC codes, with mixed-precision support across FP64, TF32, FP16, BF16, FP8, and INT8. This lets a single GPU serve workloads ranging from scientific simulations to AI model training.

- Memory Bandwidth: With up to 3.35 TB/s of HBM3 memory bandwidth (H100 SXM5), the GH100 can feed massive datasets to its compute units quickly, reducing memory bottlenecks.
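To make the FP8 bullet above concrete, the sketch below decodes a raw byte in either of Hopper's two FP8 encodings. It follows the conventions published in the FP8 format proposal. E4M3 has 4 exponent bits, 3 mantissa bits, and an exponent bias of 7, with no infinities: only the all-ones pattern is NaN. E5M2 has 5 exponent bits, 2 mantissa bits, and a bias of 15, with IEEE-style infinities and NaNs. The helper names (`decode_fp8`, `decode_e4m3`, `decode_e5m2`, `largest_finite`) are illustrative and are not part of any NVIDIA API.

```python
import math


def decode_fp8(byte: int, exp_bits: int, man_bits: int, bias: int,
               ieee_specials: bool) -> float:
    """Decode one 8-bit float: 1 sign bit, exp_bits exponent bits,
    man_bits mantissa bits (exp_bits + man_bits == 7)."""
    sign = -1.0 if (byte >> 7) & 1 else 1.0
    exp = (byte >> man_bits) & ((1 << exp_bits) - 1)
    man = byte & ((1 << man_bits) - 1)
    exp_max = (1 << exp_bits) - 1
    man_max = (1 << man_bits) - 1

    if ieee_specials and exp == exp_max:
        # E5M2: an all-ones exponent encodes Inf (mantissa 0) or NaN.
        return sign * math.inf if man == 0 else math.nan
    if not ieee_specials and exp == exp_max and man == man_max:
        # E4M3: only the all-ones pattern is NaN; there is no Inf.
        return math.nan
    if exp == 0:
        # Subnormal: no implicit leading 1.
        return sign * (man / (1 << man_bits)) * 2.0 ** (1 - bias)
    # Normal number: implicit leading 1.
    return sign * (1.0 + man / (1 << man_bits)) * 2.0 ** (exp - bias)


def decode_e4m3(byte: int) -> float:
    return decode_fp8(byte, exp_bits=4, man_bits=3, bias=7, ieee_specials=False)


def decode_e5m2(byte: int) -> float:
    return decode_fp8(byte, exp_bits=5, man_bits=2, bias=15, ieee_specials=True)


def largest_finite(decode) -> float:
    """Scan all 256 bit patterns for the largest finite value."""
    return max(v for v in map(decode, range(256)) if math.isfinite(v))


print(largest_finite(decode_e4m3))  # 448.0
print(largest_finite(decode_e5m2))  # 57344.0
```

The extra exponent bit in E5M2 trades precision for range. This is why FP8 training recipes commonly use E4M3 for forward-pass tensors and E5M2 for gradients.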

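Here’s a summary of the key specifications for the NVIDIA GH100 GPU. The unit counts describe the full GH100 die. The performance figures are for the shipping H100 SXM5 and PCIe products, which enable only a subset of those units.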

| Specification | Value |
|---|---|
| GPU Processing Clusters (GPCs) | 8 |
| Texture Processing Clusters (TPCs) | 72 (9 TPCs per GPC) |
| Streaming Multiprocessors (SMs) | 144 (2 SMs per TPC) |
| FP32 CUDA Cores | 18,432 (128 per SM) |
| Fourth-Generation Tensor Cores | 576 (4 per SM) |
| Memory Configuration | 6 HBM3 or HBM2e stacks |
| Memory Controllers | 12 (512-bit each) |
| L2 Cache | 60 MB |
| Peak FP64 Performance | 33.5 TFLOPS (SXM5), 25.6 TFLOPS (PCIe) |
| Peak FP32 Performance | 66.9 TFLOPS (SXM5), 51.2 TFLOPS (PCIe) |
| Peak Tensor Core Performance (TF32) | 494.7 TFLOPS (SXM5), 378 TFLOPS (PCIe) |
| Memory Bandwidth | Up to 3.35 TB/s |
| NVLink and PCIe Support | Fourth-Generation NVLink and PCIe Gen 5 |
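The unit counts in the table follow directly from the GPC, TPC, and SM hierarchy. The peak FP32 and FP64 figures follow from three inputs: the number of enabled SMs, the FMA units per SM, and the boost clock. The sketch below reproduces both. Several values are not stated in this article and are assumptions taken from NVIDIA's published H100 specifications: the enabled-SM counts (132 for SXM5, 114 for PCIe), the 64 FP64 units per SM, and the boost clocks (1.98 GHz and 1.755 GHz). The `Product` and `peak_tflops` names are illustrative.

```python
from dataclasses import dataclass

# Full GH100 die, from the specification table.
GPCS = 8
TPCS_PER_GPC = 9
SMS_PER_TPC = 2
FP32_CORES_PER_SM = 128
TENSOR_CORES_PER_SM = 4

# Assumption (not stated in the article): FP64 units per SM.
FP64_UNITS_PER_SM = 64


@dataclass
class Product:
    name: str
    enabled_sms: int        # assumption: shipping parts disable some SMs
    boost_clock_ghz: float  # assumption: published boost clock


def peak_tflops(sms: int, units_per_sm: int, clock_ghz: float) -> float:
    # Each fused multiply-add (FMA) counts as 2 floating-point operations;
    # GHz * 1e9 / 1e12 = divide by 1000 to get TFLOPS.
    return sms * units_per_sm * 2 * clock_ghz / 1000.0


full_sms = GPCS * TPCS_PER_GPC * SMS_PER_TPC
print(full_sms, full_sms * FP32_CORES_PER_SM, full_sms * TENSOR_CORES_PER_SM)  # 144 18432 576

for p in (Product("H100 SXM5", 132, 1.98), Product("H100 PCIe", 114, 1.755)):
    fp32 = peak_tflops(p.enabled_sms, FP32_CORES_PER_SM, p.boost_clock_ghz)
    fp64 = peak_tflops(p.enabled_sms, FP64_UNITS_PER_SM, p.boost_clock_ghz)
    print(f"{p.name}: FP32 {fp32:.1f} TFLOPS, FP64 {fp64:.1f} TFLOPS")
```

Under these assumptions, the computed values round to the 66.9/33.5 TFLOPS (SXM5) and 51.2/25.6 TFLOPS (PCIe) figures in the table.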

GH100 vs. Previous Generations

Compared to the NVIDIA A100 from the Ampere architecture, the GH100 delivers:

- Up to 3x faster AI training performance.

- Enhanced energy efficiency, making it more sustainable for data center operations.

- Better scalability for massive multi-GPU deployments.
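Better multi-GPU scalability is largely a matter of link bandwidth. Below is a back-of-the-envelope comparison of fourth-generation NVLink and a PCIe Gen 5 x16 slot. It assumes the commonly published figures: 18 NVLink links at 25 GB/s per direction each on H100 SXM5, and 32 GT/s per PCIe lane with 128b/130b encoding. The 10 GB payload is a hypothetical example, and real transfers achieve less than these peak rates.

```python
# Fourth-generation NVLink on H100 SXM5 (assumed published figures).
NVLINK_LINKS = 18
NVLINK_GBPS_PER_LINK_PER_DIR = 25.0  # GB/s, one direction

# PCIe Gen 5 x16 (assumed: 32 GT/s per lane, 128b/130b line encoding).
PCIE5_GTPS_PER_LANE = 32.0
PCIE5_LANES = 16
PCIE5_ENCODING = 128 / 130


def nvlink_gbps_per_dir() -> float:
    return NVLINK_LINKS * NVLINK_GBPS_PER_LINK_PER_DIR


def pcie5_gbps_per_dir() -> float:
    # Each transfer moves one bit per lane; divide by 8 to get bytes.
    return PCIE5_GTPS_PER_LANE * PCIE5_LANES * PCIE5_ENCODING / 8


def transfer_seconds(payload_gb: float, gbps: float) -> float:
    """Ideal time to move payload_gb gigabytes at gbps GB/s."""
    return payload_gb / gbps


payload_gb = 10.0  # hypothetical payload, e.g. a shard of gradients
nv, pc = nvlink_gbps_per_dir(), pcie5_gbps_per_dir()
print(f"NVLink: {nv:.0f} GB/s per direction, {2 * nv:.0f} GB/s total")
print(f"PCIe Gen 5 x16: {pc:.1f} GB/s per direction")
print(f"{payload_gb} GB: {transfer_seconds(payload_gb, nv) * 1e3:.1f} ms "
      f"vs {transfer_seconds(payload_gb, pc) * 1e3:.1f} ms")
```

The resulting ratio of roughly 7x is in line with how NVIDIA positions NVLink against PCIe Gen 5.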

Real-World Applications of GH100

The GH100 GPU targets demanding workloads in industries such as:

- Healthcare: Accelerating drug discovery and genome sequencing with AI-driven analysis.

- Finance: Enabling real-time risk analysis and fraud detection using advanced machine learning models.

- Autonomous Vehicles: Training the large perception and planning models behind the next generation of AI-driven autonomous systems.

Architectural Overview

The GH100 GPU is organized as a hierarchy of processing units:

- GPU Processing Clusters (GPCs): The GH100 comprises 8 GPCs.

- Texture Processing Clusters (TPCs): It features 72 TPCs, with 9 TPCs per GPC.

- Streaming Multiprocessors (SMs): Each TPC contains 2 SMs, totaling 144 SMs in the full GPU configuration.

- CUDA Cores: The architecture includes 128 FP32 CUDA cores per SM, amounting to 18,432 FP32 CUDA cores for the entire GPU.

- Tensor Cores: The GH100 integrates four fourth-generation Tensor Cores per SM, resulting in 576 Tensor Cores across the full GPU.

- Memory Configuration: The GPU supports either HBM3 or HBM2e memory stacks, with a total of 6 stacks and 12 memory controllers.
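Each of the Tensor Cores listed above computes small matrix multiply-accumulate (MMA) operations of the form D = A × B + C. The inputs A and B can use a low-precision format such as FP16, while the accumulator can be kept in a wider format such as FP32. The pure-Python sketch below emulates that pattern to show why the wider accumulator matters. It rounds inputs to FP16 with the standard library's `struct` half-precision format, and it uses Python's float (FP64) as a stand-in for the FP32 accumulator. It illustrates the numerics only, not how the hardware tiles or schedules the work, and the function names are hypothetical.

```python
import struct
from typing import List

Matrix = List[List[float]]


def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def mma(a: Matrix, b: Matrix, c: Matrix) -> Matrix:
    """D = A @ B + C with FP16-rounded A and B and a wide accumulator.

    Python floats are FP64 here, standing in for the FP32 accumulator."""
    rows, inner, cols = len(a), len(b), len(b[0])
    d = [row[:] for row in c]
    for i in range(rows):
        for j in range(cols):
            acc = d[i][j]
            for k in range(inner):
                acc += to_fp16(a[i][k]) * to_fp16(b[k][j])
            d[i][j] = acc
    return d


def sum_fp16_accumulator(values: List[float]) -> float:
    """Sum with the running total rounded to FP16 after every add."""
    acc = 0.0
    for v in values:
        acc = to_fp16(acc + v)
    return acc


print(mma([[1, 2], [3, 4]], [[5, 6], [7, 8]], [[0, 0], [0, 0]]))
# [[19.0, 22.0], [43.0, 50.0]]
values = [2048.0, 0.5, 0.5, 0.5, 0.5]
print(sum_fp16_accumulator(values), sum(values))  # 2048.0 2050.0
```

The second example shows the failure mode that a wide accumulator avoids. Near 2048, FP16 values are spaced 2 apart, so each small addend is rounded away when the running sum itself is stored in FP16.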

Why GH100 is Critical for the Future

As AI models grow larger and more complex, the demand for high-performance GPUs like the GH100 will only increase. The combination of the Hopper architecture’s innovations and NVIDIA’s commitment to pushing technological boundaries ensures that the GH100 will remain a cornerstone of AI and HPC development for years to come.

Performance Metrics

Peak throughput figures for H100 products built on the GH100 are:

- Peak Floating Point Performance:

  - FP64: Up to 33.5 TFLOPS (SXM5) and 25.6 TFLOPS (PCIe)

  - FP32: Up to 66.9 TFLOPS (SXM5) and 51.2 TFLOPS (PCIe)

- Tensor Core Performance (SXM5, dense; NVIDIA's figures with structured sparsity are twice as high):

  - Peak TF32 Tensor Core: Up to 494.7 TFLOPS

  - Peak FP16 Tensor Core: Up to 989.4 TFLOPS

  - Peak BF16 Tensor Core: Up to 989.4 TFLOPS

  - Peak FP8 Tensor Core: Up to 1978.9 TFLOPS
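One way to read these peak figures alongside the 3.35 TB/s memory bandwidth from the specification table is the roofline model. Under this model, a kernel can approach a given compute peak only if its arithmetic intensity (FLOPs performed per byte moved to or from memory) exceeds the ratio of peak throughput to bandwidth. The sketch below computes that ridge point for the SXM5 figures above. It is a first-order estimate that ignores caches and on-chip reuse, and the helper names are illustrative.

```python
BANDWIDTH_TBPS = 3.35  # HBM3 bandwidth, H100 SXM5 (from the article)

# Peak throughput in TFLOPS, H100 SXM5, dense (from the article).
PEAK_TFLOPS = {
    "FP64": 33.5,
    "FP32": 66.9,
    "TF32 Tensor": 494.7,
    "FP16 Tensor": 989.4,
    "FP8 Tensor": 1978.9,
}


def ridge_point(peak_tflops: float, bandwidth_tbps: float) -> float:
    """Arithmetic intensity (FLOP/byte) above which a kernel can be
    compute-bound: TFLOP/s divided by TB/s leaves FLOP/byte."""
    return peak_tflops / bandwidth_tbps


def attainable_tflops(intensity: float, peak_tflops: float,
                      bandwidth_tbps: float) -> float:
    """Roofline bound: min(compute peak, intensity * bandwidth)."""
    return min(peak_tflops, intensity * bandwidth_tbps)


for name, peak in PEAK_TFLOPS.items():
    ridge = ridge_point(peak, BANDWIDTH_TBPS)
    print(f"{name:12s} ridge point ~ {ridge:7.1f} FLOP/byte")

# FP32 vector add c[i] = a[i] + b[i]: 1 FLOP per 12 bytes (2 loads + 1 store).
vec_add_intensity = 1 / 12
print(attainable_tflops(vec_add_intensity, PEAK_TFLOPS["FP32"], BANDWIDTH_TBPS))
```

For example, an FP32 vector add is capped at roughly 0.28 TFLOPS by memory bandwidth, far below the 66.9 TFLOPS compute peak. FP8 Tensor Core math needs roughly 590 FLOPs per byte to become compute-bound, which is why the bandwidth figure matters as much as the FLOPS figures.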

Applications

The GH100 is designed for a variety of applications in AI and HPC:

- AI Training and Inference: The architecture is optimized for training large AI models and performing inference tasks efficiently.

- Data Analytics: Its high throughput makes it suitable for processing vast amounts of data quickly.

- Scientific Simulations: The GH100 can handle complex simulations in fields such as genomics, climate modeling, and physics.

Conclusion

The NVIDIA GH100 GPU stands at the forefront of computing technology, delivering leading performance for AI and HPC workloads. Its advanced architecture, combined with features like fourth-generation Tensor Cores and support for high-bandwidth memory, makes it an essential tool for researchers and enterprises seeking cutting-edge computational power. This overview highlights the capabilities of the GH100 GPU and its role in advancing technology in data centers and beyond.
